Software RAID 1 Configuration in Linux

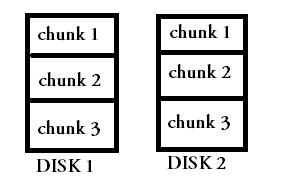

Of the different RAID levels available, RAID 1 is known for providing redundancy without striping. It uses mirroring: every block written to one disk is also written to the other, so each disk holds a complete copy of the data.

The minimum number of disks required to create a RAID 1 array is 2, and the maximum can go up to 32. The number of disks does not have to be even. With three members, Linux software RAID simply keeps three identical copies of the data.

The posts below explain the different RAID levels and how they work internally. They also cover configuring RAID 0 and RAID 5 in Linux.

Read: Raid Working: A complete Tutorial

Read: How to configure Software Raid 0 in Linux

Read: How to configure software Raid5

RAID 1 needs at least two partitions. We therefore create two, /dev/sda8 and /dev/sda9, and change their partition type to RAID (fd).

[root@localhost ~]# fdisk /dev/sda
The number of cylinders for this disk is set to 19457.
There is nothing wrong with that, but this is larger than 1024,
and could in certain setups cause problems with:
1) software that runs at boot time (e.g., old versions of LILO)
2) booting and partitioning software from other OSs
   (e.g., DOS FDISK, OS/2 FDISK)
Command (m for help): n
First cylinder (18895-19457, default 18895):
Using default value 18895
Last cylinder or +size or +sizeM or +sizeK (18895-19457, default 19457): +100M
Command (m for help): n
First cylinder (18908-19457, default 18908):
Using default value 18908
Last cylinder or +size or +sizeM or +sizeK (18908-19457, default 19457): +100M
Command (m for help): t
Partition number (1-9): 9
Hex code (type L to list codes): fd
Changed system type of partition 9 to fd (Linux raid autodetect)
Command (m for help): t
Partition number (1-9): 8
Hex code (type L to list codes): fd
Changed system type of partition 8 to fd (Linux raid autodetect)
Command (m for help): w
The partition table has been altered!
Calling ioctl() to re-read partition table.
WARNING: Re-reading the partition table failed with error 16: Device or resource busy.
The kernel still uses the old table.
The new table will be used at the next reboot.
Syncing disks.
[root@localhost ~]#

Explanation:

fdisk: the tool used to create the new partitions

n: create a new partition

8, 9: the partition numbers

+100M: the size of each partition

t: change the type of the given partition

fd: the type code for a Linux raid autodetect partition

w: write the changes to the partition table and exit

The kernel could not re-read the partition table (error 16 above). Use the partprobe command to update it without a reboot, and then view the table:

[root@localhost ~]# partprobe
[root@localhost ~]# fdisk -l
Disk /dev/sda: 160.0 GB, 160041885696 bytes
255 heads, 63 sectors/track, 19457 cylinders
Units = cylinders of 16065 * 512 = 8225280 bytes
   Device Boot      Start         End      Blocks   Id  System
/dev/sda1   *           1          13      102400    7  HPFS/NTFS
Partition 1 does not end on cylinder boundary.
/dev/sda2              13        3825    30617600    7  HPFS/NTFS
/dev/sda3            3825       11474    61440000    7  HPFS/NTFS
/dev/sda4           11475       19457    64123447+   5  Extended
/dev/sda5           11475       18868    59392273+  83  Linux
/dev/sda6           18869       18881      104391   fd  Linux raid autodetect
/dev/sda7           18882       18894      104391   fd  Linux raid autodetect
/dev/sda8           18895       18907      104391   fd  Linux raid autodetect
/dev/sda9           18908       18920      104391   fd  Linux raid autodetect
Disk /dev/md0: 213 MB, 213647360 bytes
2 heads, 4 sectors/track, 52160 cylinders
Units = cylinders of 8 * 512 = 4096 bytes
Disk /dev/md0 doesn't contain a valid partition table
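Before you build the array, it can help to confirm which partitions carry the fd type. The following is a minimal sketch that assumes the older fdisk -l table layout shown above. In that layout a partition line starts with /dev/ and the Id column sits right before the three-word System text "Linux raid autodetect". list_raid_partitions is a hypothetical helper name, not part of any tool.

```shell
# Sketch: print partitions whose Id column is "fd" (Linux raid autodetect)
# in old-style `fdisk -l` output read from stdin.
# Assumes the System text is the three words "Linux raid autodetect",
# so the Id sits in field NF-3; list_raid_partitions is a hypothetical name.
list_raid_partitions() {
    awk '$1 ~ /^\/dev\// && NF >= 4 && $(NF-3) == "fd" { print $1 }'
}

# Usage (as root): fdisk -l /dev/sda | list_raid_partitions
```

Run against the table above, it would list /dev/sda6 to /dev/sda9. That includes the two partitions already used by /dev/md0, so check /proc/mdstat before you reuse any of them.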

Now create a RAID 1 device called /dev/md1:

[root@localhost ~]# mdadm --create /dev/md1 --level=1 --raid-devices=2 /dev/sda8 /dev/sda9
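The --raid-devices value must equal the number of member devices given on the command line. Below is a minimal sketch of a hypothetical helper, raid1_create_cmd, that builds the command from the member list so the two cannot disagree. It only prints the command. It also refuses fewer than two members, matching the minimum stated at the start of this article. The /dev/md2 and /dev/sdb1 to /dev/sdd1 names in the comment are hypothetical.

```shell
# Sketch: build the mdadm --create command for a RAID 1 array from its
# member list, so --raid-devices always equals the number of members.
# raid1_create_cmd is a hypothetical helper; it only prints the command.
raid1_create_cmd() {
    local array=$1
    shift
    if [ "$#" -lt 2 ]; then
        echo "raid1_create_cmd: RAID 1 needs at least 2 members, got $#" >&2
        return 1
    fi
    echo "mdadm --create $array --level=1 --raid-devices=$# $*"
}

# Example: raid1_create_cmd /dev/md1 /dev/sda8 /dev/sda9
#   prints the same command used above.
# A three-way mirror (hypothetical devices) is equally valid:
#   raid1_create_cmd /dev/md2 /dev/sdb1 /dev/sdc1 /dev/sdd1
```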

To see detailed information about the new array /dev/md1, use the following commands:

[root@localhost ~]# mdadm --detail /dev/md1
/dev/md1:
        Version : 0.90
  Creation Time : Tue Apr 9 14:50:00 2013
     Raid Level : raid1
     Array Size : 104320 (101.89 MiB 106.82 MB)
  Used Dev Size : 104320 (101.89 MiB 106.82 MB)
   Raid Devices : 2
  Total Devices : 2
Preferred Minor : 1
    Persistence : Superblock is persistent
    Update Time : Tue Apr 9 14:50:07 2013
          State : clean
 Active Devices : 2
Working Devices : 2
 Failed Devices : 0
  Spare Devices : 0
           UUID : 034c85f3:60ce1191:3b61e8dc:55851162
         Events : 0.2
    Number   Major   Minor   RaidDevice State
       0       8        8        0      active sync   /dev/sda8
       1       8        9        1      active sync   /dev/sda9

[root@localhost ~]# cat /proc/mdstat
Personalities : [raid0] [raid1]
md1 : active raid1 sda9[1] sda8[0]
      104320 blocks [2/2] [UU]
md0 : active raid0 sda7[1] sda6[0]
      208640 blocks 64k chunks
unused devices: <none>

The output above shows that the RAID 1 array /dev/md1 has been created from /dev/sda8 and /dev/sda9. In /proc/mdstat, [2/2] [UU] means both members are active.
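In the mdstat status field, an underscore in place of a U (for example [U_]) marks a member that is missing or failed. The following is a minimal sketch that reads mdstat-format text on stdin and reports whether a named array is healthy or degraded. md_mirror_status is a hypothetical name. The sketch assumes the two-line layout per array shown above, with the "N blocks [n/m] [UU]" line directly after the "mdN : ..." line.

```shell
# Sketch: report whether an md array in /proc/mdstat-style text (stdin)
# is "healthy" or "degraded". md_mirror_status is a hypothetical name.
# Assumes each array status line, such as "104320 blocks [2/2] [UU]",
# directly follows its "mdN : ..." line, as in the output above.
# Exits 1 if the array is absent or has no [U_] status (e.g. raid0).
md_mirror_status() {
    awk -v name="$1" '
        $1 == name && $2 == ":" { want = 1; next }
        want {
            want = 0
            # Last field looks like [UU]; "_" marks a missing or failed member.
            if ($NF ~ /^\[[U_]+\]$/) {
                found = 1
                if (index($NF, "_")) print "degraded"
                else print "healthy"
            }
        }
        END { if (!found) exit 1 }
    '
}

# Usage: md_mirror_status md1 < /proc/mdstat
```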

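FORMAT RAID DEVICE /dev/md1:

The filesystem goes on the array device, not on the member partitions. mke2fs -j creates an ext3 filesystem (ext2 with a journal):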

[root@localhost ~]# mke2fs -j /dev/md1
mke2fs 1.39 (29-May-2006)
Filesystem label=
OS type: Linux
Block size=1024 (log=0)
Fragment size=1024 (log=0)
26104 inodes, 104320 blocks
5216 blocks (5.00%) reserved for the super user
First data block=1
Maximum filesystem blocks=67371008
13 block groups
8192 blocks per group, 8192 fragments per group
2008 inodes per group
Superblock backups stored on blocks:
        8193, 24577, 40961, 57345, 73729
Writing inode tables: done
Creating journal (4096 blocks): done
Writing superblocks and filesystem accounting information: done
This filesystem will be automatically checked every 20 mounts or
180 days, whichever comes first.  Use tune2fs -c or -i to override.

Now create a directory /raid1 and mount /dev/md1 on it.

[root@localhost ~]# mkdir /raid1
[root@localhost ~]# mount /dev/md1 /raid1
[root@localhost ~]# df -h
Filesystem            Size  Used Avail Use% Mounted on
/dev/sda5              55G   18G   35G  34% /
tmpfs                 502M     0  502M   0% /dev/shm
/dev/sda3              59G   31G   28G  53% /root/Desktop/win7
/dev/md0              198M  5.8M  182M   4% /raid0
/dev/md1               99M  5.6M   89M   6% /raid1
[root@localhost ~]#

The output above shows that /dev/md1 is mounted on /raid1 with 99M of space. That is the capacity of a single 100M member, because the two partitions mirror each other.

HARD DISK FAILURE & RECOVERY TEST

What happens to the data in /raid1 if one of the disks fails or crashes?

Will RAID 1 protect the data from such a loss?

To answer these questions, we will create some files in /raid1. Then we will manually mark one member of the array as failed and check the files again.

[root@localhost ~]# cd /raid1

[root@localhost raid1]# touch {a..z}

[root@localhost raid1]# ls

a b c d e f g h i j k l lost+found m n o p q r s t u v w x y z

[root@localhost raid1]#
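A listing with ls only shows that the file names survive. To check the contents as well, one option is to record checksums before failing a member and compare them afterwards. The following is a minimal sketch that assumes find and the coreutils md5sum are available. snapshot_dir and the /root/before.md5 and /root/after.md5 paths are hypothetical.

```shell
# Sketch: list an md5 checksum for every regular file under a directory,
# sorted by path, so two snapshots can be compared with cmp or diff.
# Assumes find and coreutils md5sum; snapshot_dir is a hypothetical name.
snapshot_dir() {
    (cd "$1" && find . -type f -exec md5sum {} + | LC_ALL=C sort -k 2)
}

# Usage (as root), around the fail step:
#   snapshot_dir /raid1 > /root/before.md5
#   mdadm /dev/md1 --fail /dev/sda9
#   snapshot_dir /raid1 > /root/after.md5
#   cmp /root/before.md5 /root/after.md5 && echo "contents unchanged"
```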

Now mark /dev/sda9 as failed in /dev/md1, and then check the state of the array and the data:

[root@localhost ~]# mdadm /dev/md1 --fail /dev/sda9
mdadm: set /dev/sda9 faulty in /dev/md1
[root@localhost ~]# mdadm --detail /dev/md1
/dev/md1:
        Version : 0.90
  Creation Time : Tue Apr 9 14:50:00 2013
     Raid Level : raid1
     Array Size : 104320 (101.89 MiB 106.82 MB)
  Used Dev Size : 104320 (101.89 MiB 106.82 MB)
   Raid Devices : 2
  Total Devices : 2
Preferred Minor : 1
    Persistence : Superblock is persistent
    Update Time : Tue Apr 9 15:47:22 2013
          State : clean, degraded
 Active Devices : 1
Working Devices : 1
 Failed Devices : 1
  Spare Devices : 0
           UUID : 034c85f3:60ce1191:3b61e8dc:55851162
         Events : 0.4
    Number   Major   Minor   RaidDevice State
       0       8        8        0      active sync   /dev/sda8
       1       0        0        1      removed
       2       8        9        -      faulty spare   /dev/sda9

[root@localhost ~]# ls -l /raid1
total 12
-rw-r--r-- 1 root root     0 Apr 9 15:43 a
-rw-r--r-- 1 root root     0 Apr 9 15:43 b
-rw-r--r-- 1 root root     0 Apr 9 15:43 c
-rw-r--r-- 1 root root     0 Apr 9 15:43 d
-rw-r--r-- 1 root root     0 Apr 9 15:43 e
-rw-r--r-- 1 root root     0 Apr 9 15:43 f
-rw-r--r-- 1 root root     0 Apr 9 15:43 g
-rw-r--r-- 1 root root     0 Apr 9 15:43 h
-rw-r--r-- 1 root root     0 Apr 9 15:43 i
-rw-r--r-- 1 root root     0 Apr 9 15:43 j
-rw-r--r-- 1 root root     0 Apr 9 15:43 k
-rw-r--r-- 1 root root     0 Apr 9 15:43 l
drwx------ 2 root root 12288 Apr 9 15:21 lost+found
-rw-r--r-- 1 root root     0 Apr 9 15:43 m
-rw-r--r-- 1 root root     0 Apr 9 15:43 n
-rw-r--r-- 1 root root     0 Apr 9 15:43 o
-rw-r--r-- 1 root root     0 Apr 9 15:43 p
-rw-r--r-- 1 root root     0 Apr 9 15:43 q
-rw-r--r-- 1 root root     0 Apr 9 15:43 r
-rw-r--r-- 1 root root     0 Apr 9 15:43 s
-rw-r--r-- 1 root root     0 Apr 9 15:43 t
-rw-r--r-- 1 root root     0 Apr 9 15:43 u
-rw-r--r-- 1 root root     0 Apr 9 15:43 v
-rw-r--r-- 1 root root     0 Apr 9 15:43 w
-rw-r--r-- 1 root root     0 Apr 9 15:43 x
-rw-r--r-- 1 root root     0 Apr 9 15:43 y
-rw-r--r-- 1 root root     0 Apr 9 15:43 z

Even after /dev/sda9 was failed, all the files are still there, because /dev/sda8 holds a complete copy of the data.
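The mdadm --detail output is the quickest place to spot this condition: State reads "clean, degraded" and the failed member appears as "faulty spare". Below is a minimal sketch of two hypothetical helpers that pull those facts out of mdadm --detail text on stdin. The field layout is assumed to match the output shown above, and other mdadm versions may format it differently.

```shell
# Sketch: pull the array state and any faulty members out of
# `mdadm --detail` output read from stdin. Function names are hypothetical;
# the field layout is assumed to match the mdadm output shown above.

# Print the value of the "State :" line, e.g. "clean" or "clean, degraded".
md_detail_state() {
    awk -F' : ' '$1 ~ /^ *State$/ { sub(/[ \t]+$/, "", $2); print $2 }'
}

# Print members whose row in the device table is marked "faulty".
md_faulty_members() {
    awk '$1 ~ /^[0-9]+$/ && /faulty/ { print $NF }'
}

# Usage (as root):
#   mdadm --detail /dev/md1 | md_detail_state
#   mdadm --detail /dev/md1 | md_faulty_members
```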

What if you want to replace the faulty member with a new partition or hard disk?

INSERTING A NEW HARD DISK:

- Create a new partition.

- Change its type to RAID (fd).

- Add the new partition to the existing array.

Follow the same steps shown at the beginning of this tutorial to create the partition and change its type to RAID (fd). Suppose the new partition is /dev/sda10.

Now add the new partition to the existing array with mdadm:

[root@localhost ~]# mdadm /dev/md1 --add /dev/sda10
mdadm: added /dev/sda10

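The new RAID-type partition has been added to /dev/md1, and the mirror is rebuilt onto it. /proc/mdstat now lists sda10 as an active member. The failed sda9 is still attached and marked (F):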

[root@localhost ~]# cat /proc/mdstat
Personalities : [raid0] [raid1]
md1 : active raid1 sda10[1] sda9[2](F) sda8[0]
      104320 blocks [2/2] [UU]
md0 : active raid0 sda7[1] sda6[0]
      208640 blocks 64k chunks
unused devices: <none>
[root@localhost ~]#
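In the run above, /dev/sda10 was added while the failed /dev/sda9 was still attached. As a result, /proc/mdstat keeps listing sda9[2](F). mdadm's --remove option detaches a failed member. Below is a minimal sketch of a remove-then-add sequence, written as a hypothetical helper, replace_failed_member. It assumes the member is already marked faulty, as /dev/sda9 is here. With the (also hypothetical) DRY_RUN=1 setting, it only prints the mdadm commands instead of running them.

```shell
# Sketch: replace an already-failed RAID 1 member (as /dev/sda9 is above).
# replace_failed_member, run and DRY_RUN are hypothetical names.
# With DRY_RUN=1 the mdadm commands are printed instead of executed.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

replace_failed_member() {
    local array=$1 failed=$2 new=$3
    # Detach the member that is already marked faulty ...
    run mdadm "$array" --remove "$failed" || return
    # ... then add the replacement; md rebuilds the mirror onto it.
    run mdadm "$array" --add "$new"
}

# Usage (as root): replace_failed_member /dev/md1 /dev/sda9 /dev/sda10
```

Removing the failed member first keeps the array listing clean. The rebuild itself only starts once the new member is added.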

KNOW THE ADVANTAGES OF RAID 1:

- As this article shows, RAID 1 is very simple to set up and understand.

- The most important advantage of RAID 1 is that when a disk fails, no data has to be reconstructed from parity. The surviving copy is simply copied to the replacement disk.

- Read performance can be better than a single disk, because reads can be served from any mirror. Write performance is about the same as a single disk (or slightly lower), because every write goes to all members.

LEARN THE DISADVANTAGES OF RAID 1:

- The main disadvantage of RAID 1 is that only 50% of the raw storage is usable with two disks, because every block is written twice.
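The 50% figure is the two-disk case. In general, a RAID 1 array is as large as its smallest member no matter how many mirrors it has, so n equal members leave 1/n of the raw space usable. In practice the figure is slightly less, because md reserves room for its superblock: the output above shows 104320 usable blocks from 104391-block partitions. Below is a small sketch of that arithmetic. The function names are hypothetical, and sizes are in 1K blocks as fdisk -l reports them.

```shell
# Sketch: capacity arithmetic for a RAID 1 array.
# Arguments are member sizes in 1K blocks; names are hypothetical.

# Usable size = size of the smallest member (before md superblock overhead).
raid1_usable_blocks() {
    printf '%s\n' "$@" | sort -n | head -n 1
}

# Percentage of raw space usable when all members are the same size:
# 100 / number of members (integer division).
raid1_efficiency_pct() {
    if [ "$#" -lt 2 ]; then
        echo "raid1_efficiency_pct: need at least 2 members" >&2
        return 1
    fi
    echo $(( 100 / $# ))
}

# Example: raid1_efficiency_pct 104391 104391   # two mirrors
```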

Sarath Pillai

Satish Tiwary

Comments

adding disk to raid

If I want to add a new disk/partition to a RAID array, should I create a filesystem on it or not? You said to make a new partition, set its type to 'fd' and add it with the mdadm command, but what about the filesystem? Will mdadm automatically write an ext3 filesystem on the added device/partition? Waiting for your valuable comments.

adding disk to raid

Hi viki,

When we use RAID for storage, we never format the member disks. The virtual disk that is finally exposed to the operating system is the RAID device we create, which is built from multiple hard disks according to the RAID level.

Formatting the members is unnecessary, because the individual physical disks are never exposed to the operating system. Only the virtual disk is exposed, and that is where the filesystem is created so that users can access it. When a new member is added, mdadm copies the array's contents onto it block by block, so the filesystem arrives along with the data.

In our example, we used partitions instead of separate physical hard disks. Building a RAID array from partitions of a single hard disk will not increase performance, and it will not protect the data if that disk fails. We used partitions only to demonstrate the commands.

Hope this answers the question.
