How To Configure MySQL Cluster with Multiple Masters on Ubuntu 16.04

MySQL is one of the popular Relational database management systems out there. Installing and configuring MySQL is quite straightforward and simple. For smaller websites and blogs, the standard setup of a single MySQL server works perfectly well. But creating a high availability setup with multiple masters is quite tricky. The popular model that people generally use for a multi node setup in MySQL is master/slave setup (where the slave is generally used for read only operations to reduce number of requests on the master).

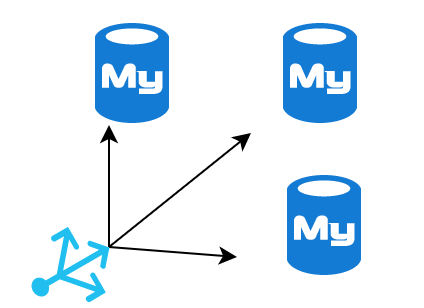

In this article, we will be discussing a method to create a multi master MySQL cluster (where read/write requests can be served by any node in the cluster).

To achieve this, we will be using MySQL Galera cluster. Unlike the standard MySQL replication, Galera cluster will enable both read and write to multiple MySQL nodes at the same time. We will be using the standard haproxy setup for load balancing traffic across these MySQL nodes.

Prerequisites

In order to complete this guide, you will need to create 4 ubuntu 16.04 servers in your environment. Please note the fact that all these servers should be located in the same LAN for better access.

3 servers for MySQL Galera Cluster(Ubuntu 16.04)

1 Haproxy (Ubuntu 16.04)

Let’s first setup the 3 servers for MySQL.

Step 1 - Ensure Galera / MySQL Repository is enabled on all three servers

For installing galera cluster using apt-get, the first step that we need to execute is to add the apt repository provided by galeracluster.com. The standard MySQL package from ubuntu apt repository does not work well with Galera cluster (as it requires a patch). So it is advisable to enable galeracluster.com repository as shown below.

sudo apt-get update && apt-get install software-properties-common

The above will command will install the required tooling to add/modify external software repositories.

sudo apt-key adv --keyserver keyserver.ubuntu.com --recv BC19DDBA

We need to now add the galera repository details to apt configuration as shown below.

sudo echo "deb http://releases.galeracluster.com/mysql-wsrep-5.6/ubuntu xenial main" >> /etc/apt/sources.list.d/galera.list

sudo echo "deb http://releases.galeracluster.com/galera-3/ubuntu xenial main" >> /etc/apt/sources.list.d/galera.list

In order to ensure that galera repository is preferred among other repositories we need to create a preference file for apt.

echo -e "Package: *\nPin: origin releases.galeracluster.com\nPin-Priority: 1001" > /etc/apt/preferences.d/galera.pref

A final apt-get update will ensure that the apt cache has the newly added repository packages in the list.

sudo apt-get update

Step 2 - Install Galera / MySQL on all three servers

Now we need to install galera with its dependencies on all the three nodes. It is a simple apt-get command away. Please note the fact that the below command will require you to set a MySQL root password(you can either keep the same or even different root passwords on these three nodes, as this will be changed later when we form the cluster).

sudo apt-get install galera-3 galera-arbitrator-3 mysql-wsrep-5.6 rsync

Step 3 - Configure Galera / MySQL Cluster on all three servers

MySQL comes with an include directory setting for /etc/mysql/conf.d/. This means any file sitting inside /etc/mysql/conf.d/, with an extension of .cnf will be read by MySQL during startup. For galera cluster, we will be creating a file inside this directory.

sudo vim /etc/mysql/conf.d/galera.cnf

The content should be as below on the first server.

[mysqld] binlog_format=ROW default-storage-engine=innodb innodb_autoinc_lock_mode=2 bind-address=0.0.0.0 # Galera Specific Config wsrep_on=ON wsrep_provider=/usr/lib/galera/libgalera_smm.so wsrep_cluster_name="testing_db" wsrep_cluster_address="gcomm://10.132.18.15,10.132.65.15,10.132.12.213" wsrep_sst_method=rsync wsrep_node_address="10.132.18.15" wsrep_node_name="db1"

Please note the fact that you need to replace 10.132.18.15,10.132.65.15,10.132.12.213 with the correct private IP addresses of your droplets. We have named our cluster “testing_db” and this first node will be named db1.

The last two lines will be different on the three servers. Rest will remain the same. On the second server, the last two lines will be as below.

wsrep_node_address="10.132.65.15" wsrep_node_name="db2"

And on the third server, those two lines will be as below.

wsrep_node_address="10.132.12.213" wsrep_node_name="db3"

Step 4 - Form Cluster between the nodes

Stop MySQL on all the three nodes.

sudo systemctl stop mysql

Now we need to initialize the cluster by bringing up the very first node using the “wsrep-new-cluster” command as shown below. On the first node (db1), run the below.

/etc/init.d/mysql start --wsrep-new-cluster

On the remaining two nodes, simply execute the systemctl mysql start command as shown below.

systemctl start mysql

Once this is done, all the nodes should show cluster size value of 3. Cluster size can be found by executing the below shown SQL query (on any nodes).

mysql -u root -p -e "SHOW STATUS LIKE 'wsrep_cluster_size'"

Step 5 - Create an HAProxy Node to load balance the traffic between the MySQL servers.

The three MySQL galera nodes that we created above will act as database nodes. MySQL read and write requests can be sent to any node, and the replication will automatically happen between these nodes. Unlike the standard MySQL master slave setup, all these three nodes can be used for read and write.

To make our database setup highly available, we need a load balancer in front of these nodes which can route traffic to any of the nodes depending upon their availability. For load balancer, we will use Haproxy.

Note: We are going to now setup the 4th Ubuntu 16.04 droplet, which is going to be our haproxy load balancer. Please note the fact that this droplet needs to be in the same datacenter as our galera nodes, and also needs to have private networking enabled.

apt-get update && apt-get install haproxy

A user needs to be created on the MySQL galera nodes, which will be used by haproxy to do the healthcheck. It is a regular MySQL user. Execute the below on any one MySQL node(it will automatically sync to other nodes, as we have the cluster formed earlier).

mysql -u root -p Enter password: mysql> CREATE USER 'haproxy'@'10.131.63.83'; Query OK, 0 rows affected (0.01 sec)

10.131.63.83 is our haproxy private ip address.

We need to also create an admin mysql user to test connectivity from haproxy node. This can be done by executing the below on any galera node.

mysql -u root -p -e "GRANT ALL PRIVILEGES ON *.* TO 'haproxy_admin'@'10.131.63.83' IDENTIFIED BY 'password' WITH GRANT OPTION; FLUSH PRIVILEGES"

As mentioned earlier, 10.131.63.83 is our haproxy private ip address. The above query will permit logins to MySQL servers from the IP address 10.131.63.83(which is our haproxy) as haproxy_admin user.

Haproxy node can now be configured to point to our three database nodes. Append the below content to the file /etc/haproxy/haproxy.conf.

listen testgalera bind 10.131.63.83:3306 balance source mode tcp option tcpka option mysql-check user haproxy server db1 10.131.60.8:3306 check weight 1 server db2 10.131.60.35:3306 check weight 1 server db3 10.131.61.117:3306 check weight 1

In the above configuration, we have explicitly asked haproxy to make port 3306 available only over the private ip address (evident from the line bind 10.131.63.83:3306). The last three lines are our backend galera MySQL nodes.

Also we have specified mysql-check using the user haproxy.

Restart Haproxy

service haproxy restart

We can confirm if the request is already being served by multiple nodes by executing the below query using haproxy IP address.

mysql --host=10.131.63.83 -u haproxy_admin -ppassword -e 'SELECT VARIABLE_VALUE as "backend ID" FROM INFORMATION_SCHEMA.GLOBAL_STATUS WHERE VARIABLE_NAME="wsrep_gcomm_uuid"'

The above command should return different ID’s for multiple requests. Which indicates the request is being served by different backend nodes. From this point onwards, to access MySQL, we will be using our HaProxy ip address.

Sarath Pillai

Sarath Pillai Satish Tiwary

Satish Tiwary

Add new comment