GlusterFS - Gluster File System - A Complete Tutorial Guide for an Administrator

Storage becomes a challenge when the size of the data you are dealing with becomes large. There are several possible solutions to such problems, and in this post we will discuss one such open source solution.

Let's discuss why finding the right storage solution matters so much once your data grows large. Say you have a large RAID array with a capacity of around 10 TB, attached to one of your servers and exported over NFS so that other clients can mount it. Every read and write operation issued by the NFS clients now has to be completed on that single server by its NFS server process.

Now imagine the clients all performing heavy read and write operations on the NFS share simultaneously. The NFS server will be under heavy load and will run short of resources (memory and processing power). What if you could combine the memory and processing power of two machines, along with their individual disks, to form a single volume that clients can access?

So the basic idea is to aggregate multiple storage servers over TCP or InfiniBand (a high-performance interconnect used in data centres to provide fast, high-throughput connections) into one very large storage pool.

GlusterFS does exactly that: it combines multiple storage servers into one large storage volume. Let's look at some important and noteworthy points about GlusterFS.

- It's open source

- You can deploy GlusterFS with the help of commodity hardware servers

- Linear scaling of performance and storage capacity

- Storage can scale to several petabytes and be accessed by thousands of clients

A good storage solution must provide elasticity in both storage and performance without affecting active operations.

Yeah, that's correct: elasticity in both storage size and performance is the most important factor in a storage solution's success. Although nearly every storage product mentions linearity as a key feature in its product details, most of them do not deliver the true linearity that is required.

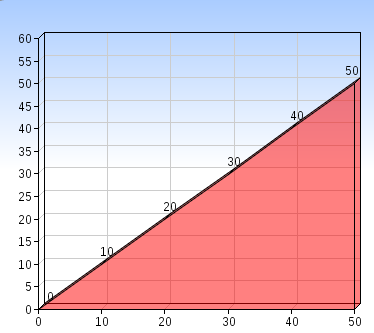

Let's first understand what linearity is.

Linearity in storage means that if a 10 TB storage system delivers 10 MB/s, increasing its size to 20 TB should deliver 20 MB/s. However, this kind of linear scaling rarely happens in practice, and a storage solution that does not provide it is misusing the term "linear".

In the graph above, the X axis is the storage size and the Y axis is performance. To achieve the linearity shown in the graph, both must grow together at the same rate, which rarely happens in the real world.
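To make the definition concrete, here is a small Python sketch of the ideal linear relationship, using the 10 TB / 10 MB/s baseline from the example above. The 14 MB/s measurement in the usage lines is a hypothetical number chosen only to illustrate a system that falls short of linearity.

```python
def ideal_throughput(size_tb: float, base_size_tb: float = 10.0,
                     base_mb_s: float = 10.0) -> float:
    """Throughput a perfectly linear system would deliver at size_tb."""
    return base_mb_s * (size_tb / base_size_tb)


def linearity(measured_mb_s: float, size_tb: float) -> float:
    """Ratio of measured to ideal throughput (1.0 means perfectly linear)."""
    return measured_mb_s / ideal_throughput(size_tb)


# Doubling 10 TB to 20 TB should double 10 MB/s to 20 MB/s.
print(ideal_throughput(20))   # 20.0
# A hypothetical system reaching only 14 MB/s at 20 TB is 70% linear.
print(linearity(14, 20))      # 0.7
```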

However, GlusterFS can achieve that linearity to a certain extent. It works roughly as follows.

You can add more disks if your organization wants to increase storage size without affecting performance, and you can add more nodes (servers) to the storage cluster if your organization wants to improve performance. Performance in Gluster comes from the combined memory and processing power of all nodes taking part in the cluster. You can increase both storage size and performance by adding more nodes and more disks per node. Similarly, you can cut costs by removing nodes or disks.

There is one principle to keep in mind while working with GlusterFS. Strictly speaking, it describes Gluster's working architecture rather than being a principle:

More number of nodes = More storage size & More Performance & More IO

Let's look at this performance versus storage size trade-off with the help of a couple of diagrams.

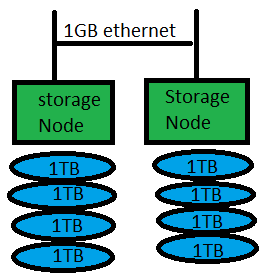

In the configuration shown above, note the following.


Server nodes: 2

Disk Drives: 4 x 1TB drives per server

Total Storage Size: 8TB

Network Speed: 1G

Total Achieved Speed: 200 MB/s (aggregate across both servers)

This configuration can be expanded by adding more 1 TB drives to each server in order to increase the storage size without affecting performance. However, if you want to improve the performance of the same storage without increasing its size, you need to add two more server nodes.

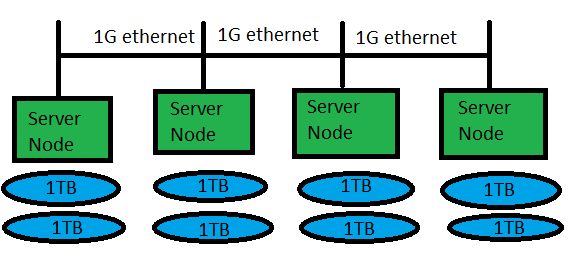

The resulting configuration looks something like the diagram above.

Note the following about this configuration.


Server nodes: 4

Disk Drives: 2 x 1TB drives per server

Total Storage Size: 8TB (the same as the previous configuration)

Network Speed: 1G

Total Achieved Speed: 400 MB/s (aggregate across all four servers)

So this configuration provides the same storage size with improved performance of 400 MB/s, achieved by increasing the number of server nodes.
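The arithmetic behind the two diagrams can be written down in a few lines of Python. This is a simplified sketch that assumes, as the diagrams imply, that each node contributes roughly 100 MB/s regardless of how many disks it holds, and that capacity is simply the sum of all disks (a distributed volume with no replication).

```python
from dataclasses import dataclass

PER_NODE_MB_S = 100  # assumption: what one node delivers over a 1G link in the diagrams


@dataclass
class Config:
    nodes: int
    disks_per_node: int
    disk_tb: int = 1

    def capacity_tb(self) -> int:
        # Raw capacity of a distributed volume: every disk counts once.
        return self.nodes * self.disks_per_node * self.disk_tb

    def aggregate_mb_s(self) -> int:
        # Performance scales with the number of nodes, not the number of disks.
        return self.nodes * PER_NODE_MB_S


before = Config(nodes=2, disks_per_node=4)
after = Config(nodes=4, disks_per_node=2)
print(before.capacity_tb(), before.aggregate_mb_s())  # 8 200
print(after.capacity_tb(), after.aggregate_mb_s())    # 8 400
```

Moving disks from two servers onto four keeps capacity constant while doubling the number of nodes doing the work, which is exactly the trade-off the diagrams show.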

However, if you keep adding nodes, remember that our network is only 1G. Beyond a certain point the network becomes the bottleneck, and to get past it you need to upgrade the network to 10G.
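A rough Python model of where the bottleneck appears is shown below. It assumes 1 Gbit/s is about 125 MB/s with no protocol overhead and a hypothetical per-node disk rate of 100 MB/s; both are simplifications. It illustrates that aggregate throughput grows with the number of nodes, while any single client remains capped by its own network link.

```python
GBIT_IN_MB_S = 1000 / 8  # 1 Gbit/s is about 125 MB/s before protocol overhead


def cluster_throughput(nodes: int, per_node_disk_mb_s: float,
                       node_link_gbit: float) -> float:
    # Each node is limited by the slower of its disks and its network link.
    per_node = min(per_node_disk_mb_s, node_link_gbit * GBIT_IN_MB_S)
    return nodes * per_node


def single_client_throughput(cluster_mb_s: float, client_link_gbit: float) -> float:
    # One client can never receive more than its own link carries.
    return min(cluster_mb_s, client_link_gbit * GBIT_IN_MB_S)


agg = cluster_throughput(nodes=4, per_node_disk_mb_s=100.0, node_link_gbit=1)
print(agg)                                # 400.0
print(single_client_throughput(agg, 1))   # 125.0
print(single_client_throughput(agg, 10))  # 400.0
```

With a 1G client link, adding nodes helps mainly when many clients share the load; upgrading to 10G raises the per-client ceiling.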

The combined processing power and memory of all the nodes in the system is what improves GlusterFS performance. GlusterFS has been a popular choice for high-performance storage in the petabyte range. The configuration you deploy in your environment depends on your workload and requirements.

In our article about NFS we discussed VFS (Virtual File System), which is an interface between the kernel and the different file systems in Linux. In simple words, VFS is a single interface that applications use without worrying about the underlying file system type. Remember that VFS does not perform the actual read and write operations itself; it hands each operation requested by a user or application over to the underlying file system module in the kernel.


Before moving on to installing and configuring GlusterFS, let's discuss something called FUSE. Memory on a Linux system is divided into two parts: user space and kernel space. As the names suggest, user space is the memory allocated to ordinary programs, and kernel space is reserved for the kernel.

Most file system code (the program that actually does the work on the file system) lives inside the kernel in the form of a module. So if you want to build your own file system, a kernel module for that file system normally has to become part of the kernel.

However, there is an easier way to achieve this without writing a kernel module. As mentioned above, the area of memory isolated for the kernel is called kernel space, and you can write your file system code in user space without touching kernel space. You might be wondering how such a file system becomes part of VFS.

The answer is a module called FUSE (Filesystem in Userspace), which lets you run your own file system code without touching the kernel. FUSE provides a bridge between your file system and VFS, so with its help you can build a new file system and make it work on Linux. Many file systems on Linux run on FUSE; some of them are listed below.

- NTFS-3G

- SSHFS

- HDFS

- GlusterFS

If you read the list above carefully, you will notice that GlusterFS is included. That is why we discussed VFS, user space, kernel space and, finally, FUSE: GlusterFS requires FUSE.

GlusterFS has been widely adopted by Red Hat for its Red Hat Enterprise Storage solutions, and Red Hat recommends it where scaling and elasticity are essential. Since FUSE is a prerequisite for GlusterFS, let's install the FUSE packages first.

[root@server1 ~]# yum install fuse fuse-libs

Note: Please don't forget to install the ATRPM repository before installing fuse. You can follow this document for installing ATRPM.

You can download the core GlusterFS packages from the official site.

Before installing Gluster, let's make our setup clear. We will have two servers, server1 and server2, acting as our server nodes. Together these two nodes will form a storage volume, which our client will mount.

We first need to install the packages below, available from the GlusterFS official site.

- glusterfs

- glusterfs-cli

- glusterfs-fuse

- glusterfs-libs

- glusterfs-server

The above packages need to be installed on every server that will act as a GlusterFS server node (server1 and server2 in our case). The glusterd service is the main service responsible for volume management, so the first thing to do is start glusterd, as shown below. Remember that glusterd must be started on all server nodes.

[root@server1 ~]# /etc/init.d/glusterd start

Starting glusterd: [ OK ]

[root@server2 ~]# /etc/init.d/glusterd start

Starting glusterd: [ OK ]

So as we have started our glusterd service on both server1 and server2, let's now make a storage pool with these two servers as members in that pool.

For this tutorial I will be using a 100 MB partition on each server, so we will have a 200 MB volume to mount on the client. (The usable size actually depends on the type of volume you create, which we will discuss shortly.)

Let's create a storage pool before going further. Creating the pool simply authorizes the servers you want as its members. If you are going to use host names while adding servers to the pool, make sure name resolution works correctly between all of them; in my case I have added these host names to the /etc/hosts file.

[root@server1 ~]# gluster peer probe server2

peer probe: success

Remember that you need to modify your firewall rules so that the servers in the pool can probe each other (glusterd listens on TCP port 24007). Otherwise you might get an error like the one below.

[root@server1 ~]# gluster peer probe server2

peer probe: failed: Probe returned with unknown errno 107
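Before probing, you can confirm that each peer's glusterd port is reachable from the other node. The Python sketch below assumes glusterd listens on its default TCP port 24007 (the port reported in the peer probe output later in this article). Brick processes listen on additional ports that depend on the GlusterFS release, so check your version's documentation when writing firewall rules.

```python
import socket

GLUSTERD_PORT = 24007  # glusterd management port


def can_reach(host: str, port: int = GLUSTERD_PORT, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts (socket.timeout subclasses OSError)
        # and name-resolution failures (socket.gaierror subclasses OSError).
        return False
```

For example, can_reach("server2") run on server1 should return True before you run gluster peer probe server2; False points at a firewall rule or a name-resolution problem.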

So our storage pool now contains two servers, server1 and server2. You might be wondering why I did not add server1 with the same probe command. That is because the local host automatically becomes part of the pool. Even if you try to add it, you will get a message like the one below.

[root@server1 ~]# gluster peer probe server1

peer probe: success: on localhost not needed

Now let's create a volume from bricks (partitions or shares) on both server nodes, which together make up the Gluster volume that clients mount. The command below creates a Gluster volume with one brick from each server node. exp1 and exp2 used below are two 100 MB partitions formatted with the XFS file system; you can also use bricks formatted with ext2 or ext3.

[root@server1 ~]# gluster volume create test-volume server1:/exp1/ server2:/exp2

Creation of volume test-volume has been successful. Please start the volume to access data.

Let's see the complete information of this newly created volume with the help of gluster command. This command shown below will give you the complete information about the volume.

[root@server1 ~]# gluster volume info

Volume Name: test-volume

Type: Distribute

Volume ID: 6d651bbf-cb8f-4db0-9d25-7f6a852add4f

Status: Started

Number of Bricks: 2

Transport-type: tcp

Bricks:

Brick1: server1:/exp1

Brick2: server2:/exp2

Some of the important information shown by the above command is described below.

Volume Name: Specifies the volume name

Volume ID: Specifies the unique volume ID

Status: Shows whether the volume is started or not

Number of Bricks: Tells the total number of bricks taking part in making the volume

Type: This tells you the type of the volume. This field needs a little more explanation, as the different volume types in Gluster behave very differently; we will discuss each type in turn later in this article. The default type is Distribute.

Transport-type: This tells you the transport mechanism used for communication between the server nodes taking part in the volume. Two transport methods are available: TCP, and high-speed InfiniBand connections between the server nodes (which require the appropriate InfiniBand drivers on all the servers). If you do not specify a transport method when creating the volume, the default, TCP, is used, as in our case.
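If you want to check these fields from a script, the output can be parsed line by line. A minimal Python sketch, assuming the one-field-per-line layout shown above for a single volume; any extra fields your release prints simply become extra keys.

```python
def parse_volume_info(text: str) -> dict:
    """Parse `gluster volume info` output for a single volume into a dict.

    Plain "Key: Value" lines become dict entries; "BrickN: host:/path"
    lines are collected, in order, into the "Bricks" list.
    """
    info = {"Bricks": []}
    for line in text.splitlines():
        line = line.strip()
        if ": " not in line:
            continue  # skips blank lines and the bare "Bricks:" header
        key, value = line.split(": ", 1)
        if key.startswith("Brick") and key[5:].isdigit():
            info["Bricks"].append(value)
        else:
            info[key] = value
    return info


sample = """Volume Name: test-volume
Type: Distribute
Status: Started
Number of Bricks: 2
Transport-type: tcp
Bricks:
Brick1: server1:/exp1
Brick2: server2:/exp2"""
info = parse_volume_info(sample)
print(info["Type"], info["Bricks"])  # Distribute ['server1:/exp1', 'server2:/exp2']
```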

Different types of volumes in gluster file system

As we saw in the volume info output above, every volume has a type. Which type to choose depends on your requirements: some types are good for scaling storage size, some are good for performance, and you can also combine two types to get the advantages of both.

Let's discuss one by one.

Distributed Glusterfs Volume

A distributed Gluster volume spreads files across the bricks in the volume. Placement is decided by a hash of the file name, so from the outside it looks random: file 1 might be stored on the first brick, and file 2 on another brick. Gluster provides no redundancy in a distributed volume; its main purpose is to make it easy to scale the volume size.

If you do not specify any option while creating a volume, a distributed volume is created by default. Keep in mind that a distributed volume provides no data redundancy: if a brick fails, all the files stored on that brick are lost. You need to rely on the underlying hardware for data loss protection in a distributed volume.

So if you use RAID for the bricks of a distributed volume, the redundancy provided by RAID can protect them from disk failure. The volume we created earlier without any options is a distributed volume.

Summary of distributed volumes:

- Files are spread over the bricks by a hash of the file name

- There is no redundancy

- Data loss protection must come from the underlying hardware (Gluster provides none)

- Best for scaling size of the volume
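The idea of hash-based placement can be sketched in Python. This is a simplified stand-in, not Gluster's actual algorithm: the real distribute translator uses its own hash function and assigns each brick a hash range per directory, whereas this sketch uses MD5 modulo the brick count. What it does show is that the same file name always maps to the same brick, and that losing a brick loses exactly the files that hashed to it.

```python
import hashlib


def pick_brick(filename: str, bricks: list[str]) -> str:
    """Choose a brick for a file from a hash of its name (simplified model)."""
    digest = hashlib.md5(filename.encode()).digest()
    index = int.from_bytes(digest[:4], "big") % len(bricks)
    return bricks[index]


bricks = ["server1:/exp1", "server2:/exp2"]
for name in ("file1", "file2", "file3", "file4"):
    print(name, "->", pick_brick(name, bricks))
```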

Replicated Volumes in gluster file system

Replicated volumes are designed for better reliability and data redundancy. Even if one brick fails, the data remains safe and accessible.

An exact copy of the data is maintained on every brick of a replicated volume. The number of replicas is your choice, and you need one brick per replica: for example, two bricks for a replicated volume with 2 replicas.

Let's see how to create a replicated volume in GlusterFS. The command is very similar to the one used for the default distributed volume, with the replica option and a replica count added:

[root@server1 ~]# gluster volume create test-volume replica 2 server1:/exp1 server2:/exp2

The above command creates a replicated volume named test-volume, with its two replicas stored on bricks server1:/exp1 and server2:/exp2.

As mentioned earlier, if you do not mention the transport type, default value of TCP is taken.

Summary of replicated volumes:

- Useful where availability is the priority

- Useful where redundancy is the priority

- The number of bricks must equal the number of replicas: if you want 5 replicas, you need 5 bricks
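A toy Python model of a two-way replica set shows why a single brick failure does not lose data. It is only an illustration: Gluster's replicate translator also tracks writes a brick missed and heals that brick when it comes back, which this sketch ignores.

```python
class ReplicaSet:
    """Toy model of a replica set: every write goes to all healthy bricks."""

    def __init__(self, bricks):
        self.data = {brick: {} for brick in bricks}
        self.failed = set()

    def write(self, name, content):
        for brick in self.data:
            if brick not in self.failed:
                self.data[brick][name] = content

    def read(self, name):
        # Any healthy brick holding the file can serve the read.
        for brick, files in self.data.items():
            if brick not in self.failed and name in files:
                return files[name]
        raise FileNotFoundError(name)


rs = ReplicaSet(["server1:/exp1", "server2:/exp2"])
rs.write("report.txt", b"quarterly numbers")
rs.failed.add("server1:/exp1")   # simulate losing one brick
print(rs.read("report.txt"))     # b'quarterly numbers'
```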

Striped Volumes in Gluster File system

A striped volume divides data into stripes and stores them across the bricks. Imagine you have a large file stored entirely on the first brick, and thousands of clients accessing your volume at the same time. With so many clients reading the same file from the same brick, performance will suffer.

A good solution is to split the file into smaller chunks and store them on different bricks, so that the load is distributed among the bricks and the file can be fetched faster. This is what a striped volume does. The stripe count you pass when creating a striped volume must equal the number of bricks in the volume. Creating a striped volume is very similar to creating a replicated volume:

[root@server1 ~]# gluster volume create test-volume stripe 2 server1:/exp1 server2:/exp2

In the above shown example, we have asked to keep the stripe count to 2, due to which we need two bricks.

Summary of striped volumes:

- Striped volumes do not provide redundancy

- Failure of one brick can cause data loss

- The stripe count must equal the number of bricks

- Improves performance when a large number of clients access the same large file
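Striping can be illustrated with a short Python sketch that deals fixed-size chunks of a file round-robin across bricks and reassembles them. The 2-byte chunk size is an arbitrary illustrative value, not Gluster's stripe block size. If any brick's chunks are lost, the file cannot be rebuilt, which is why striping alone offers no redundancy.

```python
def stripe(data: bytes, brick_count: int, block_size: int) -> list[list[bytes]]:
    """Split data into block_size chunks and deal them round-robin to bricks."""
    bricks = [[] for _ in range(brick_count)]
    for i in range(0, len(data), block_size):
        chunk_no = i // block_size
        bricks[chunk_no % brick_count].append(data[i:i + block_size])
    return bricks


def unstripe(bricks: list[list[bytes]]) -> bytes:
    """Reassemble by taking one chunk from each brick in turn."""
    out = []
    longest = max(len(chunks) for chunks in bricks)
    for row in range(longest):
        for chunks in bricks:
            if row < len(chunks):
                out.append(chunks[row])
    return b"".join(out)


layout = stripe(b"ABCDEFGHIJ", brick_count=2, block_size=2)
print(layout)            # [[b'AB', b'EF', b'IJ'], [b'CD', b'GH']]
print(unstripe(layout))  # b'ABCDEFGHIJ'
```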

Distributed Striped Volumes in Gluster File System

Distributed striped volumes are very similar to striped volumes, with the added advantage that the stripes can be distributed across more bricks on more nodes. For example, data striped with a stripe count of 4 can be distributed onto 8 bricks.

So to create a distributed striped volume with a stripe count of 2, you need, for instance, 4 bricks. In general, if the number of bricks in the volume is a multiple (greater than one) of the stripe count, the volume type is distributed striped.

This lets you distribute the stripes across several bricks. If you later want to add bricks to increase the volume size, you must add them in multiples of the stripe count.

The below command can be used to create a distributed striped volume.

[root@server1 ~]# gluster volume create test-volume stripe 2 192.168.30.132:/exp1/ 192.168.30.132:/exp3/ 192.168.30.133:/exp2/ 192.168.30.133:/exp4

Creation of volume test-volume has been successful. Please start the volume to access data.

In the above command we used a stripe count of 2 and a total of 4 bricks (a multiple of the stripe count of 2). If you create a volume with two bricks and a stripe count of 2, you get a striped volume, not a distributed striped volume.
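The brick-count rules from the previous sections can be collected into one small Python function. It is a hypothetical helper that mirrors the rules as described in this article; the names it returns follow the Type field shown by gluster volume info, with "Stripe" and "Replicate" assumed for the non-distributed cases. Volumes combining stripe and replica are deliberately left out.

```python
def volume_type(brick_count: int, stripe: int = 1, replica: int = 1) -> str:
    """Name the volume type implied by the brick count (rules as described here)."""
    if stripe > 1 and replica > 1:
        raise ValueError("this sketch handles stripe or replica, not both")
    group = max(stripe, replica)
    if brick_count % group != 0:
        raise ValueError(f"{brick_count} bricks is not a multiple of {group}")
    if group == 1:
        return "Distribute"
    kind = "Stripe" if stripe > 1 else "Replicate"
    return kind if brick_count == group else f"Distributed-{kind}"


print(volume_type(2))             # Distribute
print(volume_type(2, stripe=2))   # Stripe
print(volume_type(4, stripe=2))   # Distributed-Stripe
print(volume_type(4, replica=2))  # Distributed-Replicate
```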

Let's see the info of our distributed striped volume to be doubly sure.

[root@server1 ~]# gluster volume info

Volume Name: test-volume

Type: Distributed-Stripe

Volume ID: 7ba49f31-d0cf-47d0-8aed-470a9fffab72

Status: Created

Number of Bricks: 2 x 2 = 4

Transport-type: tcp

Bricks:

Brick1: server1:/exp1

Brick2: server1:/exp3

Brick3: server2:/exp2

Brick4: server2:/exp4

You can clearly see that the Type field shows Distributed-Stripe.

Summary of distributed striped volumes:

- It stripes files across multiple bricks

- Good for performance in accessing very large files

- The brick count must always be a multiple of the stripe count

Distributed Replicated Volumes in Gluster

Distributed replicated volumes distribute files across replicated sets of bricks. The concept is very similar to a distributed striped volume: the number of bricks must be a multiple of the replica count. They are used in environments where both high availability through redundancy and storage scaling are critical.

Extra care must be taken with the brick list when creating a distributed replicated volume; the order in which you specify the bricks matters a lot. Let's create a distributed replicated volume and see what happens.

[root@server1 ~]# gluster volume create test-volume replica 2 server1:/exp1/ server1:/exp3/ server2:/exp2/ server2:/exp4

Multiple bricks of a replicate volume are present on the same server. This setup is not optimal. Do you still want to continue creating the volume? (y/n) y

Creation of volume test-volume has been successful. Please start the volume to access data.

The above command creates a distributed replicated volume named test-volume with 4 bricks, given in the following order:

server1:/exp1/ server1:/exp3/ server2:/exp2/ server2:/exp4

That order produces two replica sets: "server1:/exp1/ server1:/exp3/" and "server2:/exp2/ server2:/exp4". Because both bricks of each set are on the same server, losing one server takes a whole replica set offline, which is why Gluster warned that this setup is not optimal.

Adjacent bricks in the command form a replica set. So if you have a replica count of 3 and 9 bricks, the first 3 bricks, in the order you give them, form the first replica set, the next three form the second replica set, and so on.
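The grouping rule is easy to check before running the create command. The hypothetical Python helpers below split a brick list into replica sets exactly as described above and flag any set whose bricks all sit on one server. Interleaving the servers in the brick list puts each copy on a different server.

```python
def replica_sets(bricks: list[str], replica: int) -> list[list[str]]:
    """Group bricks into replica sets: each run of `replica` adjacent bricks is one set."""
    if len(bricks) % replica:
        raise ValueError("brick count must be a multiple of the replica count")
    return [bricks[i:i + replica] for i in range(0, len(bricks), replica)]


def sets_on_one_host(sets: list[list[str]]) -> list[list[str]]:
    """Return the sets whose bricks all sit on the same server (no real redundancy)."""
    return [s for s in sets if len({b.split(":", 1)[0] for b in s}) == 1]


as_typed = ["server1:/exp1", "server1:/exp3", "server2:/exp2", "server2:/exp4"]
interleaved = ["server1:/exp1", "server2:/exp2", "server1:/exp3", "server2:/exp4"]
print(sets_on_one_host(replica_sets(as_typed, 2)))     # both sets flagged
print(sets_on_one_host(replica_sets(interleaved, 2)))  # []
```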

[root@server1 ~]# gluster volume info

Volume Name: test-volume

Type: Distributed-Replicate

Volume ID: 355f075d-e714-4dbf-af18-b1c586f0736a

Status: Created

Number of Bricks: 2 x 2 = 4

Transport-type: tcp

Bricks:

Brick1: server1:/exp1

Brick2: server1:/exp3

Brick3: server2:/exp2

Brick4: server2:/exp4

The above volume info output shows that the volume type is Distributed-Replicate.

Summary of distributed replicated volumes:

- Data is distributed across replicated sets

- Good redundancy and good scaling

We have now seen the 5 volume types that can be built from different numbers of bricks. However, we have not yet seen how to start these volumes so that clients can mount and use them.

How to start a volume in glusterfs

A glusterfs volume can be started by the below command. You can start your volume by this command for any type of volume you have created.

[root@server1 ~]# gluster volume start test-volume

Starting volume test-volume has been successful

Now as our volume is started, it can now be used by the clients. Let's see how.

How to access a glusterfs volume

The primary recommended method of accessing a GlusterFS volume is the GlusterFS native client, which must be installed on every machine that needs access to the volume. It is the best of the available methods for the following reasons:

- It provides better concurrency and performance than the other options

- It offers transparent failover on GNU/Linux

A GlusterFS volume can also be accessed over NFS, and from Windows over CIFS.

Let's see the recommended method of accessing glusterfs volume with glusterfs client. Let's access our test-volume, with glusterfs client on Linux machine.

For this to work properly, you need to have the below packages on the client.

- fuse

- fuse-libs

- openib (for infiniband)

- libibverbs(for infiniband)

- glusterfs-core

- glusterfs-rdma

- glusterfs-fuse

The command below mounts the Gluster volume from any one of the servers that hold a brick. Internally, the client learns about all the nodes containing bricks from that server and communicates with them directly, spreading the load.

[root@client ~]# mount.glusterfs server1:/test-volume /mnt

In the similar method, any number of clients can mount this same volume with the same command shown above.

Let's now see methods used to increase and decrease size of a gluster volume.

How to expand a gluster volume

You may want to add more bricks to increase the size of your volume. The first step is to probe the server that holds the new brick (whether it is a new node or one already in the pool).

[root@server1 ~]# gluster peer probe server2

Probe on host 192.168.30.133 port 24007 already in peer list

Now add your required new brick with the below command.

[root@server1 ~]# gluster volume add-brick test-volume server2:/exp5

Now recheck the volume information with the gluster volume info command shown earlier. If you can see the newly added brick, all is well. Now let's rebalance the volume.

[root@server1 ~]# gluster volume rebalance test-volume start

Starting rebalance on volume test-volume has been successful

Don't forget to add bricks in multiples of the replica or stripe count when expanding distributed replicated or distributed striped volumes.

After rebalancing, the layout must be fixed so that files newly added to existing directories can also be placed on the new bricks.

[root@server2 ~]# gluster volume rebalance test-volume fix-layout start

However, even after the above command, although the layout of the old directories now includes the new bricks, the existing data inside them has not yet been migrated. So we need to run the command below.

[root@server1 ~]# gluster volume rebalance test-volume migrate-data start
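To see why existing data has to be migrated, the Python sketch below counts how many files would land on a different brick when a volume grows from 4 to 5 bricks. It uses a simplified hash-modulo placement rather than Gluster's per-directory hash ranges, so the exact fraction will differ on a real volume; the point is only that a layout change leaves many existing files on bricks that no longer match the new layout.

```python
import hashlib


def brick_for(name: str, brick_count: int) -> int:
    # Simplified placement (hash of the name modulo brick count); GlusterFS
    # really assigns each brick a hash range per directory.
    h = int.from_bytes(hashlib.md5(name.encode()).digest()[:4], "big")
    return h % brick_count


def files_to_migrate(names: list[str], old_count: int, new_count: int) -> list[str]:
    """Names whose target brick changes when the layout grows."""
    return [n for n in names if brick_for(n, old_count) != brick_for(n, new_count)]


names = [f"file{i}" for i in range(1000)]
moving = files_to_migrate(names, old_count=4, new_count=5)
print(f"{len(moving)} of {len(names)} files would move to a different brick")
```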

The rebalance status can be checked with the command "gluster volume rebalance test-volume status".

How to shrink a gluster volume

Shrinking a Gluster volume works much like expanding it, with slight changes to the commands used: the volume is shrunk by removing bricks from it.

[root@server1 ~]# gluster volume remove-brick test-volume server2:/exp4

Now check the volume info with the volume info command. Remember that when shrinking distributed replicated or distributed striped volumes, you must remove bricks in multiples of the replica or stripe count.

Now simply rebalance the volume, exactly as we did when expanding it.

Sarath Pillai


Satish Tiwary

Comments

check list

Could you please post the check list before&after patching/migration in linux..............................

Hi gopal,


I actually did not get your question.. can u please explain a little more what you are asking..

You want checklist to verify things in linux after kernel patching?

OR

You want to migrate your application from Windows to Linux? Or is it related to the current topic of Gluster?

Surely we will do our level best to help you out,,please clarify the thing you asked..

Regards

gluster

it is very good document

Mandatory locking

Hi, many thanks for the step-by-step guide. Can you please help me with mandatory locks, so that one file cannot be written by multiple clients at the same time?

Regards

Nitin

Client mount HA

Thanks for a great doc. Can you shed some more light on transparent failover for the clients? If I mount the gluster volumes by running "mount.glusterfs server1:/test-volume /mnt", what happens if server1 goes offline? Would the /mnt on the client hang? I don't see how the client /mnt would change from server1:/test-volume to server2:/test-volume without some sort of virtual IP. Any help is much appreciated.

thanks,

Tom

Slow Speed

Nice guide. I created a striped volume for performance purposes. I have installed samba3 with ctdb and configured it on the GFS volume for Windows clients, and the file transfer speed is only 10Mb (from and to). How can I achieve the 200MB speed mentioned in this guide?

Slow speed

I agree - gluster is pretty slow. I have the same problem. My devices are on a 10GbE that runs at 600MB/s and the devices themselves have 400-700MB/s local read/write access. When I use gluster on 6 nodes i get 50MB/s data access. I think the 200MB/s in this article is "aspirational" and not tested.

Gluster filesystem not claiming free space

We have a 3-brick striped volume; each brick is 4.8T, so the volume size is 14T. If we delete data from the volume or a brick, the freed space does not show up in the df -lh output, although we can see it with du -sh. How can we reclaim that free space so that df -lh reports it?

shrinking a volume

Hi,

I want to shrink the volume by removing a brick. How can I do this without losing the data which is present in brick which I am going to remove..

Glusterfs

How glusterfs can gracefully handle the failover scenario? In terms of storage how scalable is glusterfs filesystem ? and will the performance be affected when more bricks/instances are added?

Glusterfs Failover and scalability

Hi ish,

Glusterfs can handle failover mechanism very easily simply because you have multiple nodes taking part in the gluster storage(provided you are using a replicated gluster).

When you mount a glusterfs file system from any one of the server, the server actually provides a file that contains details about all nodes taking part in the storage. This way failover is pretty seamless, as if one of the server stops responding another node from the cluster is selected.

Performance depends upon the kind of storage you are using. If you are using a striped volume, performance will be much better (as reads and writes will be distributed across nodes).

Replicated gluster will provide redundancy as well.

Thanks

Sarath

rebalancer

how to perform data rebalancing on the nodes?

can we find out performance of it across the nodes?

data balancing

First of all congratulations and many thanks to you for making such a good documentation. I want to find if I have few number of files and two nodes. After running the commands for data balancing(using rebalance) how to find how many files are balanced by which node? can we check the performance of each node's balancing?

Verify Gluster Rebalancing Status

Hi Madhavi,

You can actually use the below command to verify the status.

Thanks

Sarath

Gluster

Very good explanation..

200MB/s and 400MB/s

The 200MB/s and 400MB/s are a bit misleading. Given a gigabit network, any one stream won't achieve > 120MB/s before overhead (hard disk, network, os, gluster ). Therefore any client mounting those storage volumes will be capped at the same rate for its max throughput unless faster networking is used. It doesn't matter that storage pool server 1 has access to 3x120MB/s streams coming from the other 3 servers, as it can't deliver > 120MB/s to the client on a single stream unless faster networking is present between the client and the gluster nodes.

For those using, samba setting "strict locking = No" in global config seems to help my speed.
