Logstash Tutorial: Linux Central logging server

Whenever a component in your architecture has a problem, the first thing a system administrator does is check the logs of the application involved, because logs give insight into the problem that application is facing.

If you are responsible for only a handful of servers and the applications running on them, you can simply grep the logs to collect information. But imagine a situation where you have hundreds of servers running many different types of applications. In such cases you can't go to every machine and run tail and grep, or even use awk to format the output the way you want.

Learning the art of formatting output with awk is a good thing, but it does not really serve the purpose of troubleshooting, and doing the same on hundreds of servers would take hours.

A central logging server solves part of the problem. But a central server alone only aggregates all the logs in one place; sorting through them to find the problem during a disaster is still a nightmare.

It would be better to have a central logging server where all application and system logs are collected, along with a good web interface that filters and sorts the different fields in the logs.

Below are the points we need to accomplish to have simple, efficient, and scalable log management:

- Integrated collection of logs in one place

- Parsing of logs (so that you don't need to run tail, grep, or awk to sort the output)

- Storage and easy search

Fortunately, there is an open source tool that can help us solve this problem. The tool is called logstash.

Logstash is free and open source, released under the Apache 2 license. It is a very efficient log management solution for Linux. A major advantage is that logstash can collect log inputs from the following places:

- Windows Event Logs

- Syslog

- TCP/UDP

- Files (any custom log file outside syslog that your application writes log data to)

- STDIN

The sources above cover almost every log location your infrastructure is likely to have.

Logstash is written in JRuby, so it requires Java on your machine to run.

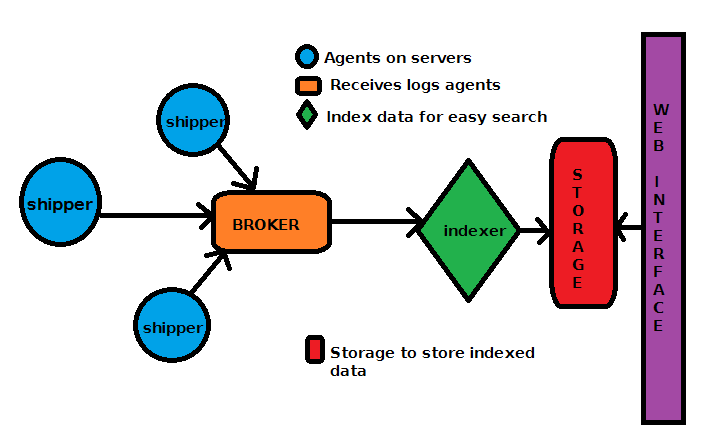

There are five important components in our logstash log management setup:

- Shipper: a remote component that runs on all the agents (the clients/servers that send their logs to our central logstash server)

- Search and storage for the data

- Web interface: where you can search and view your log details for a specified time range

- Indexer: receives the log data from the remote agents and indexes it, so that we can search it later

- Broker: receives log event data from the different agents (the remote servers) and holds it until the indexer picks it up

The logstash architecture diagram looks something like the one below, and includes all the components we mentioned above.

How to install and configure Logstash?

Now let's go ahead and install our central logging server with logstash. For this example configuration I will be using an Ubuntu 12.04 server. I use this exact configuration in one of my architectures, which runs a wide variety of applications, some proprietary and some open source.

In that environment I run this configuration on Amazon AWS, and my logstash server uses Amazon S3 as its storage. For this tutorial, we will use the local system for storage.

Logstash is easy to scale because each of its components can run separately, on its own machine. For this tutorial, however, we will keep all of the components on one central server.

The main prerequisite for running a logstash central server is Java, because logstash runs inside a Java Virtual Machine. So let's see how to install Java on our Ubuntu system. Although I am showing this example configuration on an Ubuntu machine, you can use the same method to configure logstash on a CentOS/Red Hat system as well (of course, you need to replace Ubuntu-specific commands like apt-get with yum).

So let's first install Java JDK on our central logstash server.

root@ubuntu-dev:~# apt-get install openjdk-7-jdk

Once you have installed the JDK, you can confirm the installation with the command below.

root@ubuntu-dev:~# java -version

Now let's create a directory for the logstash jar file. It's always better to create a separate directory for each application to avoid confusion and keep things neat.

We will have three directories: one to hold the logstash application, one to hold the configuration files, and a third to hold the logs related to logstash itself.

root@ubuntu-dev:~# mkdir /opt/logstash
root@ubuntu-dev:~# mkdir /etc/logstash
root@ubuntu-dev:~# mkdir /var/log/logstash

Note that it is not at all necessary to create the logstash folders in exactly these locations. You can create them anywhere, or skip the folders entirely if you wish. Their only purpose is to organize the different files in a structured manner.

Now let's get inside our logstash application directory and download the logstash java jar file from its official website using wget command. This can be done as shown below.

root@ubuntu-dev:~# cd /opt/logstash/
root@ubuntu-dev:/opt/logstash# wget https://download.elasticsearch.org/logstash/logstash/logstash-1.2.2-flatjar.jar
root@ubuntu-dev:/opt/logstash# mv logstash-1.2.2-flatjar.jar logstash.jar

The jar file we just downloaded contains both the agent and the server.

Keep in mind that there is no difference in the package (jar file) between the logstash server and the agents. The only difference is how you configure it: the logstash server runs this jar file with a server-specific configuration, and the logstash agents run the same jar file with shipper-specific configurations.

Next, we need to install the broker. If you remember the architecture diagram, the log data sent by the different servers is received by a broker on the logstash server.

The idea of having a broker is to hold the log data sent by agents until logstash indexes it. A broker decouples the agents from the indexer, which improves the performance of the logstash server. You can use any of the brokers listed below.

- Redis server

- AMQP (Advanced Message Queuing Protocol)

- ZeroMQ

We will be using the first one as our broker, as it is easy to configure. So our broker will be a redis server.

Let's go ahead and install redis server by the below command.

root@ubuntu-dev:~# apt-get install redis-server

If you are using a CentOS/Red Hat distribution, first install the EPEL yum repository to get a recent version of redis.

Redis acts as a buffer for log data until logstash indexes and stores it. Because redis keeps its data in RAM, it is very fast.

Now we need to modify the redis configuration file so that it will listen on all interfaces, and not only localhost 127.0.0.1. This can be done by commenting out the below line in the redis configuration file /etc/redis/redis.conf.

#bind 127.0.0.1
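If you manage several broker hosts, the same edit can be scripted. The Python sketch below is only an illustration: it assumes the stock Ubuntu /etc/redis/redis.conf, where the directive is an uncommented line starting with `bind`, and the helper name `comment_out_bind` is our own.

```python
import re

# Matches an active (uncommented) "bind ..." directive at the start of a line.
# Lines that already start with "#" do not match and are left alone.
_BIND_DIRECTIVE = re.compile(r"^([ \t]*)(bind[ \t]+)", re.MULTILINE)


def comment_out_bind(conf_text: str) -> str:
    """Return redis.conf text with every active bind directive commented out,
    so that redis listens on all interfaces (hypothetical helper)."""
    return _BIND_DIRECTIVE.sub(r"\1#\2", conf_text)
```

To use it, read /etc/redis/redis.conf, keep a backup copy, pass the text through comment_out_bind, and write the result back before restarting redis.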

Now restart redis server by the below command.

root@logstash-dev-qa:~# /etc/init.d/redis-server restart

Our redis server is now listening on all network interfaces and is ready to receive log data from remote servers. To test the redis configuration, you can use redis-cli, a tool that ships with the redis package. Redis listens on port 6379 by default, and redis-cli connects to that port by default.

Testing redis server can be done by connecting to our redis server instance using redis-cli command and then issuing the command "PING". If all is well, you should get an output of "PONG"

root@ubuntu-dev:~$ redis-cli -h 192.168.0.101
redis 192.168.0.101:6379> PING
PONG

Alternatively, you can issue the same PING command by simply telnetting to port 6379 from any remote server.
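What redis-cli and telnet do here is simple enough to sketch in Python. The example assumes redis's documented wire protocol (RESP), in which a command is sent as an array of bulk strings and a healthy server answers PING with +PONG; the helper names `encode_command` and `ping` are our own. Redis also accepts plain inline commands, which is why typing PING over telnet works too.

```python
import socket


def encode_command(*args: str) -> bytes:
    """Encode a command as a RESP array of bulk strings, the way redis-cli sends it."""
    parts = [b"*%d\r\n" % len(args)]
    for arg in args:
        data = arg.encode("utf-8")
        parts.append(b"$%d\r\n%s\r\n" % (len(data), data))
    return b"".join(parts)


def ping(host: str, port: int = 6379, timeout: float = 3.0) -> bool:
    """Send PING to a redis server and report whether it answered +PONG."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(encode_command("PING"))
        reply = sock.recv(64)  # a healthy server replies with b"+PONG\r\n"
    return reply.startswith(b"+PONG")
```

For example, ping("192.168.0.101") should return True from a remote machine once redis is no longer bound only to 127.0.0.1.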

Our redis broker is now ready. But one main piece is still missing on our logstash server: the search and indexing tool. The main objective of a central log server is not only to collect all logs in one place but also to provide meaningful data for analysis. For example, you should be able to search all log data for a particular application within a specified time period.

Hence our logstash server needs good search and indexing capabilities. To achieve this, we will install another open source tool called elasticsearch.

Elasticsearch builds an index of the data and then searches that index, which makes searches fast. It is a kind of search engine for text data. Elasticsearch works in a clustered model by default: even a single elasticsearch node is part of a cluster defined in its configuration file (in our case we will have only one elasticsearch node, which will be part of a cluster). This makes scaling easy. If we need more elasticsearch nodes in the future, we can simply add another Linux host and specify the same cluster name (and, if needed, the node addresses) in its elasticsearch configuration.
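The idea of "building an index and then searching that index" is usually called an inverted index, and elasticsearch (through the Lucene library it is built on) relies on it. The toy Python version below only illustrates the concept; real elasticsearch adds text analysis, relevance scoring, and sharding on top, and the function names here are our own.

```python
from __future__ import annotations

from collections import defaultdict


def build_index(docs: dict[int, str]) -> dict[str, set[int]]:
    """Map each lower-cased word to the ids of the documents containing it."""
    index: defaultdict[str, set[int]] = defaultdict(set)
    for doc_id, text in docs.items():
        for term in text.lower().split():
            index[term].add(doc_id)
    return dict(index)


def search(index: dict[str, set[int]], query: str) -> set[int]:
    """Return ids of documents that contain every word of the query (AND search)."""
    terms = query.lower().split()
    if not terms:
        return set()
    # Intersect the posting sets of each term; no document is scanned at query time.
    result = set(index.get(terms[0], set()))
    for term in terms[1:]:
        result &= index.get(term, set())
    return result
```

The cost of tokenizing is paid once at index time, which is why searching many log lines stays fast.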

Tools like elasticsearch and redis are big topics in themselves, and I cannot discuss them in complete detail here (the primary objective of this tutorial is to get a central logging server with logstash up and running). I'm sorry about that. However, I will surely cover redis and elasticsearch in upcoming posts.

Now let's go ahead and install elasticsearch on our logstash server. The main prerequisite for installing elasticsearch is Java, which we have already installed in the previous section.

Elasticsearch is available for download from the official elasticsearch download page.

We will be downloading Debian package of elasticsearch, as we are setting up our logstash central server on an Ubuntu machine. If you visit the elasticsearch download page, you can get RPM, tar.gz packages as well. Let's download the debian package on our logstash server with wget command as shown below.

root@ubuntu-dev:~# wget https://download.elasticsearch.org/elasticsearch/elasticsearch/elasticsearch-0.90.7.deb

The next step is to simply go ahead and install elasticsearch with dpkg command as shown below.

root@ubuntu-dev:~# dpkg -i elasticsearch-0.90.7.deb

Once elasticsearch is installed, the service should start automatically. If it is not running, you can always start it with the command below.

root@ubuntu-dev:~# /etc/init.d/elasticsearch start

As I mentioned earlier, elasticsearch always works as a cluster, even if it is a one-node cluster. Installing and starting elasticsearch will by default make it part of some cluster. You can find the current cluster name in the elasticsearch configuration file /etc/elasticsearch/elasticsearch.yml.

You will find the below two lines inside the elasticsearch yml configuration file.

#cluster.name: elasticsearch
#node.name: "Franz Kafka"

Both lines are commented out by default. Uncomment them and set the cluster name you want; for this example, we will name our elasticsearch cluster "logstash". Also give the node a name of your choice. Any future node configured with the same cluster name will become part of this cluster.

Once done with the renaming, restart elasticsearch service by the previously shown command for the changes to take effect.

Elasticsearch listens on port 9200 by default. You can double-check this by browsing to http://<your IP address>:9200, where you should see a status along with version details.
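The same check can be scripted, which is handy for confirming the cluster rename. This Python sketch assumes elasticsearch's `_cluster/health` endpoint on port 9200, which returns JSON with `cluster_name` and `status` fields; `cluster_health` and `check_cluster` are hypothetical helper names.

```python
import json
from urllib.request import urlopen


def cluster_health(host: str, port: int = 9200, timeout: float = 5.0) -> dict:
    """Fetch and decode the _cluster/health JSON document from an elasticsearch node."""
    url = f"http://{host}:{port}/_cluster/health"
    with urlopen(url, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


def check_cluster(health: dict, expected_name: str = "logstash") -> str:
    """Summarize a health document and flag a cluster name we did not expect."""
    name = health.get("cluster_name")
    status = health.get("status")
    if name != expected_name:
        return f"unexpected cluster name {name!r} (expected {expected_name!r})"
    return f"cluster {name!r} is {status}"
```

For example, check_cluster(cluster_health("192.168.0.106")) should report the cluster "logstash" after the rename. On a one-node cluster a yellow status is common, because replica shards have no second node to be placed on.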

We now have three main components of our logstash central logging server ready: the broker (redis server), the logstash jar file, and the indexing and search tool (elasticsearch). But we have not yet told our logstash server (which is basically the jar file we downloaded and kept in /opt/logstash/) about the broker and elasticsearch.

We first need to create a logstash configuration file in the /etc/logstash directory we created, describing these two components (elasticsearch and redis). Once the configuration file is ready, we can start our logstash server using the jar file in /opt/logstash/, passing the configuration file from /etc/logstash as an argument. We also need to provide a location where the logstash server logs its own events (this is the regular /var/log/logstash folder).

Please don't confuse the /var/log/logstash directory with the central logging location our logstash server will use. Logs from all the different servers are stored in elasticsearch's storage location, as we will see shortly.

You can name the logstash configuration file anything you like; you simply pass it as an argument when starting the logstash server. We will call our server configuration file server.conf. Create a file named server.conf inside /etc/logstash/ and paste the following contents into it.

input {
  redis {
    host => "192.168.0.106"
    type => "redis"
    data_type => "list"
    key => "logstash"
  }
}

output {
  stdout { }
  elasticsearch {
    cluster => "logstash"
  }
}

Logstash configuration is organized into blocks. The input block tells logstash where to get events from, and the output block tells it where to send them for storage and indexing. (An optional filter block, used for parsing, can sit between the two.)

Our input and output blocks are simple to understand. The input says: read events from the redis list named "logstash" on 192.168.0.106 (which is this host itself), and set the type of each event to "redis".

The output says: print each processed event to standard output, and hand it to the elasticsearch cluster named "logstash" for storage and search.

You might wonder how logstash understands the language we used in server.conf: how it knows what elasticsearch is, what redis is, and so on. Don't worry about that; the logstash jar file (the single component logstash needs to run) has built-in support for these brokers and input and output types.

Now our main logstash central server configuration file is ready. Let's go ahead and start our logstash service by using the jar file in /opt/logstash/ directory. You can start logstash by the below command.

#java -jar /opt/logstash/logstash.jar agent -v -f /etc/logstash/server.conf --log /var/log/logstash/server.log

Although you can start the logstash server with the command above, a better way to start, stop, and restart the logstash service is with an init script. There is already a nice init script for logstash at the GitHub location below; modify it to use your configuration file name and it should work.

Logstash Init Script to start and stop the service

Please let me know in the comments if you are unable to get it working with the above init script.

Now we have two tasks pending. One is the kibana web interface for logstash. And the second is configuring our first agent (client server) to send logs to our central logstash server.

How to configure kibana web interface for logstash?

Logstash comes with a built-in kibana console, which can be started with the command below. Remember what I mentioned earlier: all the components logstash needs come pre-built inside the single jar file we downloaded, so the kibana web component can also be started using logstash.jar, as shown below.

#java -jar /opt/logstash/logstash.jar web

But a better way to serve the kibana console is with an nginx web server whose document root contains kibana. To run kibana with nginx, we first need to install nginx. As we are doing all of this on an Ubuntu 12.04 server, installing nginx is a single command away.

#apt-get install nginx

If you are using a Red hat or centos based distribution, you can install nginx by following the below post.

Read: How to install nginx web server in Red hat and Centos

With nginx installed, we need to download the kibana package that nginx will serve. This can be done with wget, using the URL shown below. Download the file into the nginx document root (on Ubuntu, the default nginx document root is /usr/share/nginx/).

#wget https://github.com/elasticsearch/kibana/archive/master.zip

Unzip the downloaded file, then change the nginx document root so that it points to the newly unzipped kibana master directory (edit /etc/nginx/sites-available/default and change the root directive to that directory).

By default the kibana console package we downloaded for nginx will try connecting to elasticsearch on localhost. Hence there is no modification required.

Restart nginx and point your browser to your server ip and it will take you to the default kibana logstash console.

How to configure logstash agents to send logs to our logstash central server?

Now let's look at the second remaining task: configuring our first agent to send logs to our newly installed logstash server. It's pretty simple; the steps are outlined below.

Step 1

Create the same directory structure we created for the logstash central server, as shown below. Agents also need a configuration file in /etc/logstash, log files in /var/log/logstash, and the logstash jar file in /opt/logstash/.

root@ubuntu-agent:~# mkdir /opt/logstash
root@ubuntu-agent:~# mkdir /etc/logstash
root@ubuntu-agent:~# mkdir /var/log/logstash

Step 2

Download the logstash jar file (the same jar file we used for the logstash server) and place it in the /opt/logstash directory. (As I mentioned at the beginning of this article, the directory structure is just for organization; you can place the configuration file and jar file wherever you like.)

root@ubuntu-agent:~# cd /opt/logstash/
root@ubuntu-agent:/opt/logstash# wget https://download.elasticsearch.org/logstash/logstash/logstash-1.2.2-flatjar.jar
root@ubuntu-agent:/opt/logstash# mv logstash-1.2.2-flatjar.jar logstash.jar

Step 3

Create the configuration file for our agent. This part is slightly different. Here the inputs will be the files (the log files that you want to send to our central log server), and the output will be our redis broker installed on the central logstash server.

The input block will contain a list of files (log files of any application, or even syslog) that need to be sent to our central logstash server. Let's see what the agent configuration file looks like.

Create a file in /etc/logstash/ called shipper.conf or say agent.conf or anything you like. The content should look like the below.

input {
  file {
    type => "syslog"
    path => ["/var/log/auth.log", "/var/log/syslog"]
    exclude => ["*.gz", "shipper.log"]
  }
}

output {
  stdout { }
  redis {
    host => "192.168.0.106"
    data_type => "list"
    key => "logstash"
  }
}

As you can see, I am sending /var/log/auth.log and /var/log/syslog to our central logstash server.

In the output block, we send this data to the redis server on 192.168.0.106 (our central logstash server; replace this with your own central server's IP address) under the list key "logstash". Remember that the input block on the central logstash server was redis, reading the same key. So whatever the agents push into redis, the central logstash server collects from redis and passes on to elasticsearch (because the output block on the central server was elasticsearch).
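To picture the broker's role, here is a small in-memory Python model of a redis list used as a queue. It is only an illustration, not how logstash is implemented: as we understand it, the redis output appends each event to the list with RPUSH and the redis input removes events from the head of the same list, so events are indexed in the order the agents shipped them. The class `ToyListBroker` and the event fields are our own assumptions, chosen to resemble a logstash event.

```python
from __future__ import annotations

import json
from collections import deque


class ToyListBroker:
    """In-memory stand-in for redis lists (illustration only, not a redis client)."""

    def __init__(self) -> None:
        self._lists: dict[str, deque[str]] = {}

    def rpush(self, key: str, value: str) -> int:
        """Append to the tail of the list, as an agent's redis output would."""
        items = self._lists.setdefault(key, deque())
        items.append(value)
        return len(items)

    def lpop(self, key: str) -> str | None:
        """Remove from the head of the list, as the central server's redis input would."""
        items = self._lists.get(key)
        return items.popleft() if items else None


def ship(broker: ToyListBroker, key: str, host: str, path: str, line: str) -> int:
    """Agent side: wrap one log line in a JSON event and push it to the broker."""
    event = {"message": line, "host": host, "path": path, "type": "syslog"}
    return broker.rpush(key, json.dumps(event))


def drain(broker: ToyListBroker, key: str) -> list[dict]:
    """Indexer side: pop every queued event, oldest first."""
    events = []
    while (raw := broker.lpop(key)) is not None:
        events.append(json.loads(raw))
    return events
```

Because the list sits between the two sides, agents can keep pushing while the indexer is busy or restarting; the events simply wait in redis.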

Now that our logstash agent is configured, it's better to start and stop it with an init script, just like the central logstash server. The init script for the logstash agent can be found at the same GitHub location as the server's init script; refer to the shipper section there. Modify the configuration file location in it to suit your setup.

And that's it. Our first agent is now sending log data to our central log server.

You should now be able to see these messages appear on our kibana console we configured on central logstash server. Please navigate to the default logstash dashboard.

Although we now have a central logging server that receives logs from different agents, the data we are collecting is not yet very useful. We are simply forwarding raw log lines from the agents to a central server, so even though kibana shows the data in its web interface, it is not structured enough for analysis.

Remember that our main objective in having a central logging server like logstash was not just to store all logs in one place. The objective was to stop running tail, grep, and awk against these log messages.

So we need to filter the data that we are sending to our central server, so that kibana can show us some meaningful data. Let's take the example of a DNS query log. The query log looks something like the below.

20-Nov-2013 06:55:49.946 client 192.168.0.103#40622: query: www.yahoomail.com IN A + (192.168.0.134)

We need to filter the log shown above before sending it to our logstash server. By filtering, I mean telling logstash what each field in the log message is: what the first field is, what the second field is, and so on. That way, whenever I need to troubleshoot, I can find out what the DNS queries looked like between 10 AM and 11 AM this morning.

For analysis, the kibana console should then show fields such as the timestamp, the source IP, the DNS query type, and the queried domain name.
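In logstash this field extraction is done with a grok filter on the agent or on the server. As a sketch of what such a filter has to capture, here is a plain Python regular expression for the BIND query log line shown above. The field names are our own, and we assume the address in parentheses is the server address the query arrived on; query logs from other BIND versions may need a different pattern.

```python
from __future__ import annotations

import re
from datetime import datetime

# One BIND query-log line, e.g.
# 20-Nov-2013 06:55:49.946 client 192.168.0.103#40622: query: www.yahoomail.com IN A + (192.168.0.134)
QUERY_LINE = re.compile(
    r"(?P<timestamp>\d{2}-[A-Za-z]{3}-\d{4} \d{2}:\d{2}:\d{2}\.\d{3}) "
    r"client (?P<client_ip>[\d.]+)#(?P<client_port>\d+): "
    r"query: (?P<query_name>\S+) (?P<query_class>\S+) (?P<query_type>\S+) "
    r"(?P<flags>\S+) \((?P<server_ip>[\d.]+)\)"
)


def parse_query_line(line: str) -> dict | None:
    """Split a query-log line into named fields; return None if it does not match."""
    m = QUERY_LINE.match(line)
    if m is None:
        return None
    fields = m.groupdict()
    # Turn the text timestamp into a real datetime so time-range searches are possible.
    fields["timestamp"] = datetime.strptime(fields["timestamp"], "%d-%b-%Y %H:%M:%S.%f")
    fields["client_port"] = int(fields["client_port"])
    return fields
```

Once each line is split this way, a question like "which domains did 192.168.0.103 query between 10 and 11 AM" becomes a filter on two fields instead of a grep.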

I will be writing a dedicated post for each of the topics mentioned below. Till then stay tuned.

- How to filter logs sent to logstash.

- How to send windows event logs to logstash.

- How to manage storage with elasticsearch in logstash.

Hope this article was helpful in getting your logstash central log server and agents ready and running.

Sarath Pillai


Satish Tiwary

Comments

windows event logs

Hi, can you please write a tutorial on sending Windows event logs?

Parsing the logs received on TCP in logstash & adding them to elasticsearch

Hello,

I am able to receive logs over TCP on the logstash server and also able to dump them into elasticsearch.

Can you guide me on how to filter the received logs and add them to a specific index and type, so that I can search them in elasticsearch?

I also need your help creating a geoip point in elasticsearch by filtering the logs.

Thanks .

Hi Kushal,


Parsing and filtering logs requires a complete post of its own (as that's the main use of logstash, and what enables easy search with elasticsearch).

I will write the post on that as soon as possible.

Filtering requires grok regular expressions (either on the agent or the server). To begin with grok filtering you can use the grok debugger below.

http://grokdebug.herokuapp.com/

Most of the logs can be filtered using already available patterns. You can access them in the below github url.

https://github.com/logstash/logstash/tree/master/patterns
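Conceptually, grok is a library of named regular expressions: each %{PATTERN:field} is replaced by the regex for PATTERN and captured under the name field. The Python sketch below shows only that expansion step. Its four patterns are simplified stand-ins written for illustration, not copies of the real definitions from the patterns directory above.

```python
from __future__ import annotations

import re

# Simplified, hypothetical stand-ins for a few grok patterns (the real ones are more permissive).
PATTERNS = {
    "IP": r"(?:\d{1,3}\.){3}\d{1,3}",
    "INT": r"[+-]?\d+",
    "WORD": r"\b\w+\b",
    "GREEDYDATA": r".*",
}

# Matches %{NAME} or %{NAME:field} references inside a grok expression.
_GROK_REF = re.compile(r"%\{(?P<name>\w+)(?::(?P<field>\w+))?\}")


def grok_to_regex(expr: str) -> re.Pattern:
    """Expand grok references into a compiled Python regex with named groups."""
    def expand(m: re.Match) -> str:
        body = PATTERNS[m.group("name")]  # KeyError for patterns we did not define
        field = m.group("field")
        # A named reference becomes a capturing group; a bare %{NAME} only matches.
        return f"(?P<{field}>{body})" if field else f"(?:{body})"

    return re.compile(_GROK_REF.sub(expand, expr))
```

This is also why the grok debugger is so useful: you are really testing the expanded regular expression against sample lines.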

Stay tuned. I will come up with a post on this.

Regards

Sarath

issue

Hi,

I would like to thank you, because thanks to you I can work with Logstash, Elasticsearch, and Kibana, but not completely. I'm blocked: the agent.conf doesn't send logs to my server, and I don't know why. I also don't know how to complete the logstash init script.

PS: Of course I tried to work out for myself how to adapt and complete the script, but it failed.

Can you help me please ?

Postfix Logs Filtering

Hello,

First of all, I would like to thank you for the excellent tutorial. I have configured logstash now and it's working perfectly. Now I need to use logstash to filter postfix logs. Can you help me with this? I have used a grok filter as you mentioned, but I don't know why it's not working. Do I have to compile grok?

Regards,

Murtaza

Hi Murtaza,


I assume you are filtering logs on the logstash agent itself (your postfix server). In that case, add the filter below to your shipper.conf.

grok {
  type => "postfix"
  match => [ "message", "%{SYSLOGTIMESTAMP:timestamp} %{SYSLOGHOST:hostname} postfix\/(?<component>[\w._\/%-]+)(?:\[%{POSINT:pid}\]): (?<queueid>[0-9A-F]{,11})" ]
  add_tag => [ "postfix", "grokked"]
}

So your shipper.conf should look like the one below if you have followed this article while configuring logstash.

input {
  file {
    type => "postfix"
    path => ["/var/log/mail.log"]
    exclude => ["*.gz"]
  }
}

grok {
  type => "postfix"
  match => [ "message", "%{SYSLOGTIMESTAMP:timestamp} %{SYSLOGHOST:hostname} postfix\/(?<component>[\w._\/%-]+)(?:\[%{POSINT:pid}\]): (?<queueid>[0-9A-F]{,11})" ]
  add_tag => [ "postfix", "grokked"]
}

output {
  stdout { }
  redis {
    host => "192.168.0.106"
    data_type => "list"
    key => "logstash"
  }
}

Please let me know if that worked..

Regards

Hi Sarath,


It worked, man :) But I want to let you know that I had compiled grok after my first post, and I tested a pattern using this website "http://logstash.net/docs/1.3.3/filters/grok" and it filtered a test pattern. Today I saw your reply, did what you said, and it filtered the logs perfectly. So I am a little confused about whether compiling grok did the trick or simply adding the grok pattern to the shipper.conf file did. Another thing: I added a 'filter' block around grok, because without it I got the error :message=>"Error: Expected one of #, input, filter, output at line 8, column 1 (byte 102) after "}". Adding the filter block resolved this error.

My final shipper.conf is this:

input {
  file {
    type => "postfix"
    path => ["/var/log/maillog"]
    exclude => ["*.gz", "shipper.log"]
  }
}

filter {
  grok {
    type => "postfix"
    match => [ "message", "%{SYSLOGTIMESTAMP:timestamp} %{SYSLOGHOST:hostname} postfix\/(?<component>[\w._\/%-]+)(?:\[%{POSINT:pid}\]): (?<queueid>[0-9A-F]{,11})" ]
    add_tag => [ "postfix", "grokked"]
  }
}

output {
  stdout { }
  redis {
    host => "127.0.0.1"
    data_type => "list"
    key => "logstash"
  }
}

Thanks once again :)

Regards,

Murtaza

Hi Murtaza,


You are absolutely correct. It was my mistake; I somehow left out the filter block while replying to your comment.

Anyway, good to know that it worked.

If you want to filter your custom application logs, I always suggest using the web app below to confirm the pattern works (I usually test patterns there before applying them on a server).

http://grokdebug.herokuapp.com/

Regards

Sarath

Postfix Logs Filtering

Hi Sarath,

Hope you are doing good. I would like to appreciate your support and quick response on my issue :) and yes I have made a custom pattern for my postfix logs using grok debugger and it works perfectly. I would like to share my pattern so that other users can also get a help from it :).

grok {
  type => "postfix"
  match => [ "message", "%{SYSLOGTIMESTAMP} %{SYSLOGHOST} %{SYSLOGPROG}: (?<QUEUEID>[A-F0-9]+): (?<EMAILTO>to=<[a-z=]+.@[0-9a-z.+]+.),(?<RELAY>.*:\d+),(?<DELAY>.delay=[0-9]+(.[0-9]+)),(?<DELAYS>.delays=[0-9]+(.[0-9]+)+),(?<DSN>.dsn=[0-9]+(.[0-9]+)+),(?<STATUS>.*)" ]
  add_tag => [ "postfix", "grokked"]
}

Thanks again.

Regards,

Murtaza

Hi Murtaza,


Thanks for sharing it over here...

Regards

Simply Superb..!

It's really very useful and thoroughly explained. I have a doubt: can I use this architecture without any broker?

Hi,


Can I install everything on one machine?

When I browse to http://<myIp>, the website tells me the Kibana version is too old, so I updated to the latest version, http://download.elasticsearch.org/kibana/kibana/kibana-latest.zip

Then I browsed to my website again, and it told me the elasticsearch version is too old, so I updated to version 1.0.1, which is https://download.elasticsearch.org/elasticsearch/elasticsearch/elasticse...

dpkg -i elasticsearch-1.0.1.deb

After that, the website loads normally, but no logs are shown in Kibana at all.

Here is my /var/log/logstash/server.log:

...

log4j, [2014-03-21T19:26:19.734] WARN: org.elasticsearch.discovery.zen: [Rose] master_left and no other node elected to become master, current nodes: {[Rose][G8iPEwGdTX2LwAmx2L9DXg][inet[/10.90.1.46:9301]]{client=true, data=false},}

log4j, [2014-03-21T19:26:19.734] INFO: org.elasticsearch.cluster.service: [Rose] removed {[Andy][LaWpYMl5QYa-txb3zz42_Q][inet[/10.90.1.46:9300]],}, reason: zen-disco-master_failed ([Andy][LaWpYMl5QYa-txb3zz42_Q][inet[/10.90.1.46:9300]])

log4j, [2014-03-21T19:26:22.836] WARN: org.elasticsearch.discovery.zen.ping.multicast: [Rose] failed to read requesting data from /10.90.1.46:54328

java.io.IOException: Expected handle header, got [8]
    at org.elasticsearch.common.io.stream.HandlesStreamInput.readString(HandlesStreamInput.java:65)
    at org.elasticsearch.cluster.ClusterName.readFrom(ClusterName.java:64)
    at org.elasticsearch.cluster.ClusterName.readClusterName(ClusterName.java:58)
    at org.elasticsearch.discovery.zen.ping.multicast.MulticastZenPing$Receiver.run(MulticastZenPing.java:402)
    at java.lang.Thread.run(Thread.java:744)

...

How can I solve the problem and see logs are showed on the Kibana website(my ip)?

Thank you very much.

Andy

Hi Andy,


Can you completely remove your elasticsearch data directory and config files from the old install and reinstall?

Also, please configure the cluster name as mentioned in this tutorial, because the kibana package will by default look for elasticsearch at localhost:9200, and logstash will store data in a cluster named logstash. Please let me know.

A few other users have reported the same issue after upgrading elasticsearch/kibana.

Regards

Sarath

logstash-forwarder to ship windows event logs?

I'm using logstash-forwarder to ship windows logs to our central logging (logstash) server.

What do I need to configure in my "logstash-forwarder.conf" so that it can ship Windows event logs to the logging server?

thx.

Shipping windows event logs to logstash server

Hi Ricky,

For sending windows event logs to central logstash server, you need to first install nxlog on windows, and configure it to send logs to a localhost port. For example, send logs to localhost port 3999 and then give that tcp port as input to logstash config file as shown below.

input {
  tcp {
    port => 3999
    format => "json"
  }
}

Your nxlog configuration file will have an output section like the one shown below.

<Output out_tcp>
    Module om_tcp
    Port 3999
    Host localhost
</Output>
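Before nxlog is in place, one way to check that the tcp input on port 3999 accepts JSON is to send a hand-made event from a short Python client. This is a testing sketch, not a replacement for nxlog: the event fields (EventID, SourceName, Message) are invented for illustration, and we assume one newline-terminated JSON document per event; depending on your logstash version, newline-delimited JSON may need a line-oriented JSON codec rather than format => "json".

```python
import json
import socket


def encode_event(event: dict) -> bytes:
    """Serialize one event as a single newline-terminated JSON line."""
    return (json.dumps(event, separators=(",", ":")) + "\n").encode("utf-8")


def send_events(events, host: str = "localhost", port: int = 3999, timeout: float = 5.0) -> None:
    """Open one TCP connection to the logstash tcp input and write each event."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        for event in events:
            sock.sendall(encode_event(event))
```

For example, send_events([{"EventID": 4624, "SourceName": "Security", "Message": "test"}]) run on the logstash host should show up as one event if the input is decoding JSON correctly.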

Let me know...

Regards

Sarath

Shipping log file from one machine to another

Hi. I am new to logstash.

Please correct me if I am wrong.

My objective is to send the logs (generated using log4j) from a system with IP 192.XX.XX.XX to a centralized server with IP 192.YY.YY.YY.

What I have done:

i) Started the redis server on the central server.

ii) Created a config file on the other machine.

Shipper.conf

input {
  file {
    type => "syslog"
    path => ["C:/Users/nidhin/Documents/test.log"]
  }
}

output {
  stdout { debug => true debug_format => "json"}
  redis { host => "192.YY.YY.YY" data_type => "list" key => "logstash" }
}

iii) Executed the command "java -jar logstash-1.1.7-monolithic.jar agent -f shipper.conf"

I made some changes in the test.log file to see whether there is any change in dump.rdb file. But it is not getting updated .

please help.
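Note that Redis only rewrites dump.rdb when it saves a snapshot, so that file is not a reliable way to see whether events are arriving. A more direct check is to run a second Logstash agent on the central server that reads the same list and prints each event, as sketched below. The sketch assumes Redis listens on its default port and that the shipper uses the key "logstash"; the `type` value is a hypothetical label. Keep in mind that this reader removes events from the list as it consumes them. It is also worth checking that the `bind` directive in redis.conf does not restrict Redis to 127.0.0.1, since the shipper connects from another machine.

```
# Run on the central server (192.YY.YY.YY)
input {
  redis {
    host => "127.0.0.1"
    data_type => "list"
    # Must match the key the shipper writes to
    key => "logstash"
    # Hypothetical label; older 1.1.x releases expect every input to have a type
    type => "redis-check"
  }
}

output {
  # 1.1.x syntax; on 1.2 and later use: stdout { codec => rubydebug }
  stdout { debug => true }
}
```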

How to specify a user (other than root) in the host for Redis

Hi,

In my shipper, I want to send logs to a machine where Redis is running, but Redis does not run as the root user; it runs as a different one. How can I specify the user on that machine to which the logs should be sent?

Just as we use user@ip.address to SSH in as a user, what is the syntax for the Logstash redis output? I tried the user@ip.address format, but it does not work; it fails with something like :exception=>#<SocketError: initialize: name or service not known>

Any help is appreciated,

Thank You !

User and Password for redis

Hi Anali,

The Logstash redis output block accepts many parameters.

Check the official link below for the complete list of redis output options in Logstash, and try the ones mentioned there.

http://logstash.net/docs/1.1.13/outputs/redis

Let me know.

Thanks
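Some background that may help: Redis (before version 6 introduced ACL users) does not log clients in as any user, so the operating-system account the Redis process runs under makes no difference to Logstash. The `host` setting expects a plain hostname or IP address, which is why `user@ip.address` fails with a SocketError: the whole string is looked up as a hostname. If the Redis server is protected with its `requirepass` directive, the redis output's `password` setting is where that goes. A minimal sketch, where the address and password are placeholders:

```
output {
  redis {
    # Plain hostname or IP address, without any user@ prefix
    host => "192.YY.YY.YY"
    # Default Redis port
    port => 6379
    # Placeholder; only needed if requirepass is set in redis.conf
    password => "your-redis-password"
    data_type => "list"
    key => "logstash"
  }
}
```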

JBOSS LOGS

Apache logs are importing fine using the config below, but does anyone know of a config for JBoss/JBoss 7 logs?

filter {
  grok {
    match => { message => "%{COMBINEDAPACHELOG}" }
  }
  date {
    locale => "en"
    match => [ "timestamp", "dd/MMM/yyyy:HH:mm:ss Z" ]
  }
}

jboss logs in logstash

Hi Ned,

To get JBoss logs filtered in Logstash, you need to take care of several things: for example, Java errors (stack traces) should be combined into a single event, and lines starting with a space should be joined to the previous line.

Use the snippet below in your shipper config to get started with filtering JBoss logs in Logstash.

input {
  file {
    type => "jboss"
    path => ["/path/to/jboss/logs/*.log" ]
    exclude => ["*.gz", "shipper.log"]
  }
}

filter {
  grok {
    type => "jboss"
    pattern => ["%{TIME:jboss_time} %{WORD:jboss_level} %{GREEDYDATA:jboss_message}"]
    add_tag => "got_jboss_message"
  }
  multiline {
    type => "jboss"
    pattern => "^%{JAVACLASS}\:"
    what => "previous"
  }
  multiline {
    type => "jboss"
    pattern => "^\s"
    what => "previous"
  }
  multiline {
    type => "jboss"
    pattern => "^Caused by"
    what => "previous"
  }
}

output {
  stdout { }
  redis {
    host => "your redis server ip here"
    data_type => "list"
    key => "logstash"
  }
}

Hope that helps..

Let me know..

Thanks
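The three multiline filters above can also be collapsed into a single rule. The sketch below assumes every new JBoss log entry starts with a time such as 12:35:26,037 (as the grok pattern above expects) and Logstash 1.2 or later for the conditional syntax. With `negate => true`, any line that does not start with a time, whether an indented stack frame, a "Caused by" line or an exception class line, is appended to the previous event. Running multiline before grok means grok then sees the whole merged event.

```
filter {
  if [type] == "jboss" {
    # Join every line that does NOT begin with a time to the previous event
    multiline {
      pattern => "^%{TIME} "
      negate => true
      what => "previous"
    }
    # (?m) lets GREEDYDATA span the newlines of a merged stack trace
    grok {
      match => [ "message", "(?m)%{TIME:jboss_time} %{WORD:jboss_level} %{GREEDYDATA:jboss_message}" ]
      add_tag => [ "got_jboss_message" ]
    }
  }
}
```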

RE:jboss logs in logstash

Sarath ,

That's brilliant, thanks very much! It's pulling in my JBoss logs as I speak, so a big thanks to you.

logstash

Hi,

I followed your tutorial on Logstash and thought it was well written. However, I would be grateful if you could explain the passage quoted below.

"Unzip the downloaded file and then change the default document root for nginx (simply modify the file /etc/nginx/sites-available/default, and replace the root directive pointing to our kibana master folder we just uncompressed. ), so that it points to our newly unzipped kibana master directory.

By default the kibana console package we downloaded for nginx will try connecting to elasticsearch on localhost. Hence there is no modification required.

Restart nginx and point your browser to your server ip and it will take you to the default kibana logstash console."

Thanks

Hi ,

Thanks for this post. Because it is so simple and easy to understand, I learned the Logstash tool in one hour and configured it successfully. Thank you very much.

Changing the dashboard

Sarath,

I have used the conf file below to import old logs into Logstash, and I can see them in Kibana, but only when I use the guided.json dashboard. However, if I import a new dashboard, it tells me:

"No results There were no results because no indices were found that match your selected time span"

Even if I re-import the logs, I still get nothing. Even if I rename my new JSON dashboard to guided.json, the logs only show up in the old guided.json dashboard.

Here is my Logstash conf file, and below it is the dashboard I want to use.

****************************apache.conf**************************************

input {
  stdin {
    type => "apache"
  }
}

filter {
  grok {
    match => { message => "%{COMBINEDAPACHELOG}" }
  }
  date {
    locale => "en"
    match => [ "timestamp", "dd/MMM/yyyy:HH:mm:ss Z" ]
    timezone => "Europe/Rome"
  }
}

output {
  elasticsearch {
    cluster => "elasticsearch.local"
    host => "127.0.0.1"
    protocol => http
    index => "my-logs"
    index_type => "apache"
  }
}

**********************************************************************************

This is the json I want to use

https://gist.github.com/jordansissel/9698373
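A possible cause, though the post alone cannot confirm it: Kibana 3 dashboards are commonly set to look for daily indices named like logstash-YYYY.MM.DD (index pattern [logstash-]YYYY.MM.DD), while the config above writes everything into a single index called my-logs. One option is to let Logstash write to its default daily index name, as sketched below; the other is to change the index setting in the new dashboard to my-logs. Because the date filter sets @timestamp from each log line, old logs land in indices dated on the day they were logged, so the dashboard's time picker must also cover those dates.

```
output {
  elasticsearch {
    host => "127.0.0.1"
    protocol => http
    # Logstash's default daily index name, written out explicitly here
    index => "logstash-%{+YYYY.MM.dd}"
  }
}
```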

Problems with init script

Hey

Thank you for this wonderful tutorial. However, I am having problems with the Logstash init script.

I followed the Gist you provided, but I ran into problems.

logstash@asgard:~$ service logstash-reader status
/etc/init.d/logstash-reader: invalid arguments
* could not access PID file for logstash-reader
logstash@asgard:~$ service logstash-reader start
/etc/init.d/logstash-reader: invalid arguments
* could not access PID file for logstash-reader
* Starting reader logstash-reader
/etc/init.d/logstash-reader: 28: /etc/init.d/logstash-reader: start-stop-daemon: not found
[fail]

Do you have any ideas what could be the problem? Thank you.

Error while parsing log files

When I defined a pattern for parsing Apache Tomcat and application log files in Logstash, we got the following error. A sample log line is: 2014-08-20 12:35:26,037 INFO [routerMessageListener-74] PoolableRuleEngineFactory Executing the rule -->ECE Tagging Rule

The config file is:

filter {
  grok {
    type => "log4j"
    #pattern => "%{TIMESTAMP_ISO8601:logdate} %{LOGLEVEL:severity} [\w+[%{GREEDYDATA:thread},.*]] %{JAVACLASS:class} - %{GREEDYDATA:message}"
    pattern => "%{TIMESTAMP_ISO8601:logdate}"
    #add_tag => [ "level_%{level}" ]
  }
  date {
    match => [ "logdate", "YYYY-MM-dd HH:mm:ss,SSS" ]
  }
}

Error: Unknown setting 'timestamp' for date {:level=>:error}
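The posted date block contains no `timestamp` setting, so the error may come from a different config file loaded by the same agent; that is an assumption, as the post does not show how Logstash was started. Separately, the commented-out grok pattern expects " - " before the message, which the sample line does not contain. A sketch of a filter that should match the sample line, assuming Logstash 1.2 or later and the type "log4j"; it stores the text in a new field (hypothetically named logmessage) rather than overwriting message:

```
filter {
  if [type] == "log4j" {
    grok {
      # 2014-08-20 12:35:26,037 INFO [routerMessageListener-74] PoolableRuleEngineFactory Executing the rule ...
      match => [ "message", "%{TIMESTAMP_ISO8601:logdate} %{LOGLEVEL:severity} \[%{DATA:thread}\] %{JAVACLASS:class} %{GREEDYDATA:logmessage}" ]
    }
    date {
      # Joda-Time format for 2014-08-20 12:35:26,037
      match => [ "logdate", "yyyy-MM-dd HH:mm:ss,SSS" ]
    }
  }
}
```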

Great tutorial!

Great tutorial!

Did you ever get around to covering these topics?

How to filter out logs sent to Logstash.

How to send windows event logs to logstash.

How to manage storage with elasticsearch in logstash.

Nice presentation...few questions..

I don't see how this is all tied into S3. What's the secret?

Finally, how does all this fit into using python-logstash?

Thanks.

Error in Kibana Page

Hi Sarath

Thanks for the detailed steps above.

I am trying to set up central logging with the steps above, and I get the error message below when accessing the Kibana web page.

Using Firefox: Error Could not contact Elasticsearch at http://XX.XX.XX.XX:9200. Please ensure that Elasticsearch is reachable from your system

And

Using IE: No results There were no results because no indices were found that match your selected time span

Please suggest which setting I need to look at.

Re: Error in Kibana Page

Hi Pankaj,

Kibana requires access to Elasticsearch port 9200 by default. Because Kibana (version 3) runs in the browser, the system from which you access the Kibana UI must itself be able to reach Elasticsearch on port 9200.

If the system you access the UI from can only reach the Kibana web UI port, you need to open the Elasticsearch port as well; otherwise you will get that error.

Thanks

Sarath

Tomcat logs in logstash

Hi Sarath,

Can you please help me fix an issue with Tomcat 7 logs?

The logs look like this:

[2015-03-23 13:57:16,866 ] INFO AprLifecycleListener -- OpenSSL successfully initialized (OpenSSL 0.9.8e-fips-rhel5 01 Jul 2008)
[2015-03-23 13:57:17,719 ] INFO AbstractProtocol -- Initializing ProtocolHandler ["http-bio-172.28.129.81-8443"]
[2015-03-23 13:57:18,276 ] ERROR AbstractProtocol -- Failed to initialize end point associated with ProtocolHandler ["http-bio-172.28.129.81-8443"]
java.net.BindException: Address already in use /172.28.129.81:8443
at org.apache.tomcat.util.net.JIoEndpoint.bind(JIoEndpoint.java:406)
at org.apache.catalina.startup.Bootstrap.main(Bootstrap.java:455)
Caused by: java.net.BindException: Address already in use
at java.net.ServerSocket.bind(ServerSocket.java:376)
at org.apache.tomcat.util.net.JIoEndpoint.bind(JIoEndpoint.java:396)
... 17 more

The config file is below:

input {
  file {
    path => "/home/tomcat7/logs/tomcat.log"
    type => "tomcat"
  }
}

filter {
  if [type] == "tomcat" {
    multiline {
      patterns_dir => "/home/logstash-1.4.2/patterns/custome-patterns"
      pattern => "(^%{TOMCAT_DATESTAMP})|(^%{CATALINA_DATESTAMP})"
      negate => true
      what => "previous"
    }
    if "_grokparsefailure" in [tags] {
      drop { }
    }
    grok {
      patterns_dir => "/home/logstash-1.4.2/patterns/custome-patterns"
      match => [ "message", "%{TOMCATLOG}", "message", "%{CATALINALOG}" ]
    }
    date {
      match => [ "timestamp", "yyyy-MM-dd HH:mm:ss,SSS Z", "MMM dd, yyyy HH:mm:ss a" ]
    }
  }
}

output {
  elasticsearch { host => poc1 protocol => http }
  stdout { codec => rubydebug }
}

The pattern file is below:

# Java Logs
JAVACLASS (?:[a-zA-Z$_][a-zA-Z$_0-9]*\.)*[a-zA-Z$_][a-zA-Z$_0-9]*
#Space is an allowed character to match special cases like 'Native Method' or 'Unknown Source'
JAVAFILE (?:[A-Za-z0-9_. -]+)
#Allow special <init> method
JAVAMETHOD (?:(<init>)|[a-zA-Z$_][a-zA-Z$_0-9]*)
#Line number is optional in special cases 'Native method' or 'Unknown source'
JAVASTACKTRACEPART %{SPACE}at %{JAVACLASS:class}\.%{JAVAMETHOD:method}\(%{JAVAFILE:file}(?::%{NUMBER:line})?\)
JAVATHREAD (?:[A-Z]{2}-Processor[\d]+)
JAVALOGMESSAGE (.*)
# MMM dd, yyyy HH:mm:ss eg: Jan 9, 2014 7:13:13 AM
CATALINA_DATESTAMP %{MONTH} %{MONTHDAY}, 20%{YEAR} %{HOUR}:?%{MINUTE}(?::?%{SECOND}) (?:AM|PM)
# yyyy-MM-dd HH:mm:ss,SSS ZZZ eg: 2014-01-09 17:32:25,527 -0800
TOMCAT_DATESTAMP 20%{YEAR}-%{MONTHNUM}-%{MONTHDAY} %{HOUR}:?%{MINUTE}(?::?%{SECOND}) %{ISO8601_TIMEZONE}
CATALINALOG %{CATALINA_DATESTAMP:timestamp} %{JAVACLASS:class} %{JAVALOGMESSAGE:logmessage}
# [2014-01-09 20:03:28,269 ] ERROR com.example.service.ExampleService -- something compeletely unexpected happened...
TOMCATLOG \[%{TOMCAT_DATESTAMP:timestamp} \] %{LOGLEVEL:level} %{JAVACLASS:class} -- %{JAVALOGMESSAGE:logmessage}

Can you please help me fix this?
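Two things in the posted setup look likely to stop these lines from matching. First, TOMCAT_DATESTAMP ends with a mandatory %{ISO8601_TIMEZONE}, but the sample lines (for example "[2015-03-23 13:57:16,866 ]") carry no time zone. Second, the multiline pattern is anchored with ^, while each new entry starts with "[", so no line is recognised as the start of an event. The sketch below assumes Logstash 1.4 and the log format shown above, and uses only built-in grok patterns. The _grokparsefailure check is moved after grok, since that tag cannot exist before grok runs. Because the log lines have no zone, the date filter interprets them in the Logstash host's local time zone unless a timezone setting is added.

```
filter {
  if [type] == "tomcat" {
    # A new event starts with "[yyyy-MM-dd HH:mm:ss,SSS"; anything else
    # (stack frames, "Caused by:", "... 17 more") joins the previous event
    multiline {
      pattern => "^\[%{TIMESTAMP_ISO8601}"
      negate => true
      what => "previous"
    }
    # (?m) lets GREEDYDATA run across the newlines of a merged stack trace
    grok {
      match => [ "message", "(?m)^\[%{TIMESTAMP_ISO8601:timestamp} \] %{LOGLEVEL:level} %{JAVACLASS:class} -- %{GREEDYDATA:logmessage}" ]
    }
    # Optional: discard events that still fail to parse
    if "_grokparsefailure" in [tags] {
      drop { }
    }
    date {
      match => [ "timestamp", "yyyy-MM-dd HH:mm:ss,SSS" ]
    }
  }
}
```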

Logstash Build

Hi Sarath,

Your tutorial is excellent. Could you please cover the topics below as well?

How to filter out logs sent to Logstash.

How to send windows event logs to logstash.

How to manage storage with elasticsearch in logstash.

Shipping logs from one computer to another

Hi Sarath,

I am new to this. With the help of the tutorial, I installed Redis server, Logstash, Filebeat, ELK and Kibana, but I am not able to customize Kibana. How can I make sure I am receiving logs from the local computer? If possible, could you provide a tutorial on sending logs from one machine to another via Logstash?

Logstash init scripts

I am new to scripting, and I am using Amazon Linux (on AWS).

Line No 13: ./lib/lsb/init-functions : file not found

This file was not there, so I used YUM INSTALL '/lib/lsb/init-functions'. Now the problem is that the functions/commands used in the script are not in this file. Ultimately, everything results in COMMAND NOT FOUND for lines 27, 28, 31 and 40.

Notes: 1. Line numbers refer to the "reader" file.

2. apt-get and update-rc.d are not supported.
