How to Copy Files from One S3 Bucket to Another S3 Bucket in Another Account

Amazon Simple Storage Service (S3) is a solid offering for file storage and retrieval. It has a reputation for virtually unlimited scalability and high availability. You can use S3 for media files, backups, text files, and pretty much anything except live database storage. Applications usually interact directly with the S3 API to store and retrieve files.

It is also secure: access is granted through policies attached to AWS users (IAM users) and to buckets. To work with a bucket programmatically, you need an access key and secret key pair.

A bucket is simply a container that uniquely identifies a place in S3 where you store and retrieve files. Every file is stored in a bucket, and one account can own many buckets (up to a per-account quota). You can use the AWS CLI or other command-line tools to store and retrieve files in a bucket of your choice.

Always remember that bucket names share a single namespace across all AWS accounts. If anyone in the world already has a bucket named mybucket, you cannot create a bucket with that name.

S3 scales the backend storage to match your needs; your application only has to be written to talk to it. It also offloads the work of serving files from your application to the S3 API.

If an IAM user has permission to upload and download objects in several buckets of the same account, that user can also copy files between those buckets.

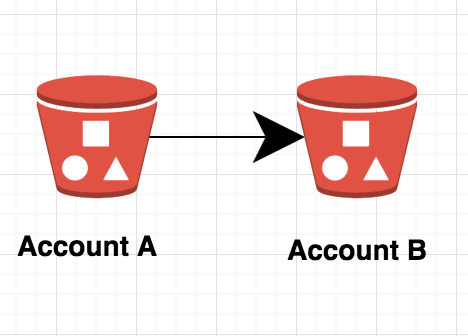

Recently I had a requirement to copy files from an S3 bucket in one AWS account to an S3 bucket in a different AWS account. S3 supports that as well.

You can copy a file from a bucket in one account to a bucket in another account by calling the S3 API directly, but only if the permissions are set up correctly. It looked simple and straightforward at first, yet I could not get it working: the many articles on this subject each use a different set of permissions, and none of them worked for me. I am writing this up so that it helps others struggling with the same problem.

We will use a single access key and secret key pair for the whole operation (you always need such a pair to work with a bucket programmatically). In other words, we need one IAM user that has the right permissions on both buckets.

Things to do on Account 1 (the source account, from which files will be copied):

Step 1: We need an S3 bucket. If it already exists, good; otherwise create one in the AWS S3 web console.

Step 2: Attach a bucket policy to the bucket from the previous step.

This policy delegates the required permissions to the other AWS account (the destination account). Every AWS account has a 12-digit account ID, and the policy grants access to the destination account's ID. The policy looks like this:

    {
        "Version": "2008-10-17",
        "Id": "Policy1357935677554",
        "Statement": [
            {
                "Sid": "CrossAccountList",
                "Effect": "Allow",
                "Principal": {
                    "AWS": "arn:aws:iam::111111111111:root"
                },
                "Action": "s3:ListBucket",
                "Resource": "arn:aws:s3:::examplebucket"
            },
            {
                "Sid": "CrossAccountS3",
                "Effect": "Allow",
                "Principal": {
                    "AWS": "arn:aws:iam::111111111111:root"
                },
                "Action": "s3:*",
                "Resource": "arn:aws:s3:::examplebucket/*"
            }
        ]
    }

You can edit the bucket policy under AWS S3 ---> select your bucket (examplebucket in this case) ---> Permissions ---> Bucket policy.

Paste the policy into the policy editor. Change 111111111111 to the account ID of the destination AWS account, and change examplebucket to the name of your source bucket.
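If you manage several source buckets or destination accounts, hand-editing the JSON gets error-prone. Below is a minimal Python sketch that builds the same policy from the two values you are told to substitute. The function name `source_bucket_policy` is hypothetical, the optional `Id` field is left out, and the 12-digit check simply reflects the format of AWS account IDs.

```python
import json
import re


def source_bucket_policy(dest_account_id: str, bucket: str) -> dict:
    """Build the cross-account bucket policy for the source bucket.

    Hypothetical helper. dest_account_id is the destination account's
    12-digit ID; bucket is the source bucket name (e.g. "examplebucket").
    """
    if not re.fullmatch(r"\d{12}", dest_account_id):
        raise ValueError("AWS account IDs are 12 digits")
    principal = {"AWS": f"arn:aws:iam::{dest_account_id}:root"}
    return {
        "Version": "2008-10-17",
        "Statement": [
            {
                # Lets the destination account list the bucket's contents.
                "Sid": "CrossAccountList",
                "Effect": "Allow",
                "Principal": principal,
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",
            },
            {
                # Lets the destination account act on every object in the bucket.
                "Sid": "CrossAccountS3",
                "Effect": "Allow",
                "Principal": principal,
                "Action": "s3:*",
                "Resource": f"arn:aws:s3:::{bucket}/*",
            },
        ],
    }


if __name__ == "__main__":
    # Print JSON suitable for pasting into the bucket policy editor.
    print(json.dumps(source_bucket_policy("111111111111", "examplebucket"), indent=2))
```

Instead of pasting the output into the console, you could save it to a file and apply it with `aws s3api put-bucket-policy --bucket examplebucket --policy file://policy.json`.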

You can find the destination account ID under the My Account option in the account drop-down at the top right of the console (while signed in to the destination account).
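If you already have credentials for the destination account configured, you can also look the ID up programmatically with the STS GetCallerIdentity call. A minimal sketch, assuming a boto3 STS client such as `boto3.client("sts")` configured for the destination account; the helper name is hypothetical:

```python
def principal_arn_for_current_account(sts) -> str:
    """Return the account-root principal ARN used in the source bucket policy.

    Hypothetical helper. `sts` is assumed to be a boto3 STS client,
    e.g. boto3.client("sts"), using destination-account credentials.
    """
    # GetCallerIdentity returns the Account ID of whoever owns the credentials.
    account_id = sts.get_caller_identity()["Account"]
    return f"arn:aws:iam::{account_id}:root"
```

The command-line equivalent is `aws sts get-caller-identity`, whose output includes the `Account` field.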

With this policy we have delegated permission to perform S3 operations on examplebucket to another AWS account ID. The destination account (whose ID appears in the policy) now holds those permissions.

However, the destination account still has to create an IAM user for copying files and delegate to that user the permissions it received from the source account.

Things to do on Account 2 (the destination account, to which files will be copied):

Step 1: Sign in to the destination AWS account as the root user and create an IAM user. Once the user is created, you get an option to download its access key and secret key. Save this key pair; we will use it to copy files from the source account to this account.

Step 2: From the AWS managed policies, attach AmazonS3FullAccess to this user. This gives the new user full access to every S3 bucket in the destination account, so files can be copied from the source into any bucket in this account.
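If you would rather script Steps 1 and 2 than click through the console, the IAM API exposes the same operations. A minimal sketch, assuming a boto3 IAM client (for example `boto3.client("iam")`) with administrator credentials for the destination account; `create_copy_user` and the user name in the test are hypothetical:

```python
from typing import Tuple

# ARN of the AWS managed policy attached in Step 2.
AMAZON_S3_FULL_ACCESS_ARN = "arn:aws:iam::aws:policy/AmazonS3FullAccess"


def create_copy_user(iam, user_name: str) -> Tuple[str, str]:
    """Create an IAM user, attach AmazonS3FullAccess, and return its key pair.

    Hypothetical helper. `iam` is assumed to be a boto3 IAM client for the
    destination account, e.g. boto3.client("iam").
    """
    iam.create_user(UserName=user_name)
    iam.attach_user_policy(UserName=user_name, PolicyArn=AMAZON_S3_FULL_ACCESS_ARN)
    # The secret key is only returned at creation time, so store it safely.
    key = iam.create_access_key(UserName=user_name)["AccessKey"]
    return key["AccessKeyId"], key["SecretAccessKey"]
```

The returned pair is the same access key and secret key you would otherwise download from the console.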

Next, create a custom policy with the content below (the name you give it in the AWS console is just an identifier). It grants access to the source bucket in the source account. Replace examplebucket with the name of your source bucket.

    {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowSourceBucket",
                "Effect": "Allow",
                "Action": [
                    "s3:*"
                ],
                "Resource": [
                    "arn:aws:s3:::examplebucket",
                    "arn:aws:s3:::examplebucket/*"
                ]
            }
        ]
    }

Step 3: Attach the custom policy created above to the user created in Step 1.
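The custom policy and the Step 3 attachment can also be scripted. A minimal sketch, assuming a boto3 IAM client for the destination account; `source_access_policy`, `attach_source_access`, and the user and policy names in the test are hypothetical. Both bucket-level and object-level ARNs are listed because ListBucket applies to the bucket while Get/Put apply to objects.

```python
import json


def source_access_policy(bucket: str) -> dict:
    """Identity policy letting the destination user act on the source bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowSourceBucket",
                "Effect": "Allow",
                "Action": ["s3:*"],
                # Bucket ARN for bucket-level actions, /* for object-level ones.
                "Resource": [f"arn:aws:s3:::{bucket}", f"arn:aws:s3:::{bucket}/*"],
            }
        ],
    }


def attach_source_access(iam, user_name: str, policy_name: str, bucket: str) -> str:
    """Create the custom managed policy, attach it to the user, return its ARN.

    `iam` is assumed to be a boto3 IAM client for the destination account.
    """
    resp = iam.create_policy(
        PolicyName=policy_name,
        PolicyDocument=json.dumps(source_access_policy(bucket)),
    )
    arn = resp["Policy"]["Arn"]
    iam.attach_user_policy(UserName=user_name, PolicyArn=arn)
    return arn
```

A call would look like `attach_source_access(boto3.client("iam"), "s3-copier", "source-bucket-access", "examplebucket")`, with names of your choosing.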

Now let's test whether it works. You can use any of the following methods to test the configuration:

- AWS CLI
- s3cmd
- Any language SDK (Java, Ruby, Python, etc.)

Let's look at the two simplest methods. First, install the AWS CLI using Python pip; on Debian or Ubuntu:

Step 1:

apt-get update && apt-get install -y python3-pip && pip3 install awscli

Step 2:

aws configure

This command prompts for the access key and secret key (and a default region). Paste the key pair you downloaded when you created the user in the destination account.

Alternatively, you can create the file ~/.aws/config in the user's home directory with the following content (substitute your own access key and secret key):

    # cat ~/.aws/config
    [default]
    aws_access_key_id = JHSJHGJHGWLTSKVMWEJ
    aws_secret_access_key = UZnOgX4SDkkenwkgemoIJkmSWSlas6iE+qMXN+Y2
    region = us-east-1

The aws configure command produces the same result by prompting you for these values, although it writes the keys to ~/.aws/credentials and the region to ~/.aws/config. The CLI reads credentials from either file.
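If you provision many machines, you may want to write this file from a script instead of typing it. A minimal sketch using Python's standard `configparser`; `write_aws_config` is a hypothetical helper, and the `[profile NAME]` section naming for non-default profiles follows the AWS CLI config-file convention:

```python
import configparser
from pathlib import Path


def write_aws_config(path: Path, access_key: str, secret_key: str,
                     region: str = "us-east-1", profile: str = "default") -> None:
    """Write (or update) one profile in an AWS CLI config-style INI file."""
    # interpolation=None so characters such as '%' are stored literally.
    config = configparser.ConfigParser(interpolation=None)
    config.read(path)  # a missing file is silently skipped
    # In ~/.aws/config the default profile is [default]; others are [profile NAME].
    section = profile if profile == "default" else f"profile {profile}"
    config[section] = {
        "aws_access_key_id": access_key,
        "aws_secret_access_key": secret_key,
        "region": region,
    }
    path.parent.mkdir(parents=True, exist_ok=True)
    with path.open("w") as fh:
        config.write(fh)
    path.chmod(0o600)  # the file holds a secret key: owner read/write only
```

For example, `write_aws_config(Path.home() / ".aws" / "config", "<access key>", "<secret key>")` produces the same `[default]` section as the listing above.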

You can test copying a file from the source bucket to a bucket in the destination account with the following command:

aws s3 cp s3://examplebucket/testfile s3://somebucketondestination/testfile

Replace examplebucket with your actual source bucket.

Replace somebucketondestination with any bucket of your choice in the destination account.

Replace testfile with any file in the source bucket.
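The same test can be run from the Python SDK. A minimal sketch, assuming a boto3 S3 client (for example `boto3.client("s3")`) created with the destination user's key pair; `copy_between_accounts` is a hypothetical helper. It performs a server-side CopyObject, which is roughly what `aws s3 cp` does between two S3 paths:

```python
from typing import Optional


def copy_between_accounts(s3, src_bucket: str, key: str,
                          dst_bucket: str, dst_key: Optional[str] = None) -> dict:
    """Server-side copy of one object from the source bucket to the destination.

    `s3` is assumed to be a boto3 S3 client using the destination user's keys.
    The data never passes through this machine; S3 copies it internally.
    """
    return s3.copy_object(
        CopySource={"Bucket": src_bucket, "Key": key},
        Bucket=dst_bucket,
        Key=dst_key or key,  # keep the same key unless told otherwise
    )
```

A single CopyObject call handles objects up to 5 GB; for larger files, boto3's managed `s3.copy(CopySource, Bucket, Key)` switches to a multipart copy automatically.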

Now let's run the same test with another tool, s3cmd. It is straightforward to set up on an Ubuntu machine:

Step 1:

apt-get install s3cmd

Step 2:

s3cmd --configure

This prompts for the access key and secret key. As before, paste the key pair you downloaded when you created the user in the destination account.

Step 3:

s3cmd cp s3://examplebucket/testfile s3://somebucketondestination/testfile

Make the same substitutions in this command: replace examplebucket with your source bucket, somebucketondestination with a bucket of your choice in the destination account, and testfile with a file in the source bucket.

Sarath Pillai


Satish Tiwary
